
Modern LLMs encode concepts by superimposing multiple features onto the same neurons; a feature is then read out from the linear combination of all neuron activations in a layer rather than from any single neuron. This phenomenon, in which each neuron carries several meanings and which one applies depends on the activations of the other neurons, is called superposition. Sparse Autoencoders (SAEs) are models that are inserted into a trained LLM to project its activations into a very large but very sparsely activated latent space. In doing so, they attempt to untangle the superimposed representations into separate latent features that each represent one clear concept, which in turn would make these latents monosemantic. This kind of mechanistic interpretability has proven very valuable for understanding model behavior, detecting hallucinations, analyzing information flow through models for optimization, and more.
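To make the idea concrete, here is a minimal, dependency-free sketch of an SAE forward pass and its loss. It is an illustration under assumptions, not this project's implementation: the class name `TinySAE`, the tied initialization, the ReLU activation, and the L1 sparsity penalty with coefficient `l1_coeff` are choices made for the example. A real SAE would be built in a tensor framework and trained on captured LLM activations.

```python
import random


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


class TinySAE:
    """Hypothetical toy SAE: d_model inputs -> d_latent sparse latents -> d_model outputs."""

    def __init__(self, d_model, d_latent, seed=0):
        rng = random.Random(seed)
        scale = 1.0 / d_model ** 0.5
        # Encoder weights: one row per latent feature.
        self.W_enc = [[rng.gauss(0.0, scale) for _ in range(d_model)]
                      for _ in range(d_latent)]
        self.b_enc = [0.0] * d_latent
        # Assumption: decoder starts as the transpose of the encoder.
        self.W_dec = [[self.W_enc[j][i] for j in range(d_latent)]
                      for i in range(d_model)]
        self.b_dec = [0.0] * d_model

    def encode(self, x):
        # Project the activation into the wide latent space; ReLU keeps
        # only positively activated latents, which encourages sparsity.
        centered = [xi - b for xi, b in zip(x, self.b_dec)]
        pre = matvec(self.W_enc, centered)
        return [max(0.0, p + b) for p, b in zip(pre, self.b_enc)]

    def decode(self, f):
        # Reconstruct the original activation from the latents.
        return [r + b for r, b in zip(matvec(self.W_dec, f), self.b_dec)]

    def loss(self, x, l1_coeff=1e-3):
        f = self.encode(x)
        x_hat = self.decode(f)
        mse = sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
        l1 = sum(f)  # latents are non-negative after ReLU, so this is the L1 norm
        return mse + l1_coeff * l1, f
```

For example, `TinySAE(d_model=4, d_latent=16).loss([0.5, -1.0, 0.25, 2.0])` returns the combined loss and the 16 latent activations. Training would minimize this loss so that each input is reconstructed well while only a few latents are active.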

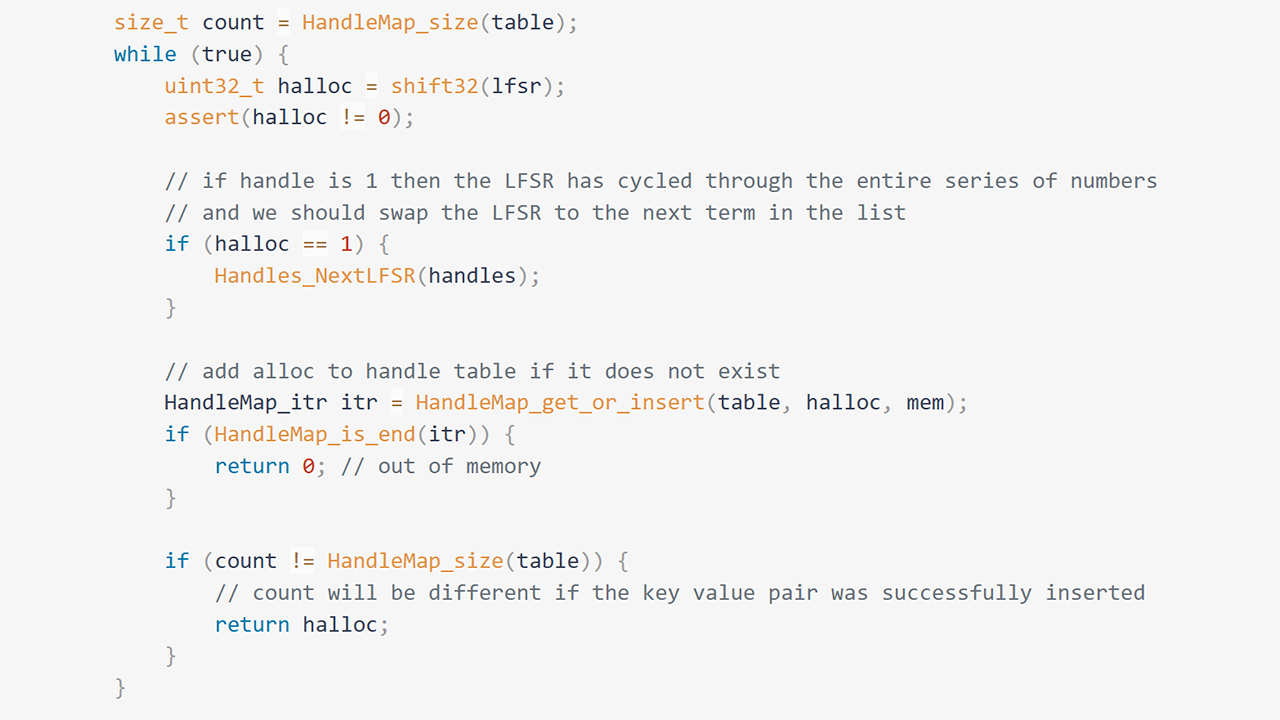

This project attempts to recreate the research on mechanistic LLM interpretability with Sparse Autoencoders (SAEs) for extracting interpretable features, which was conducted and published very successfully by Anthropic, OpenAI and Google DeepMind a few months ago. The project aims to provide a full pipeline for capturing training data, training the SAEs, analyzing the learned features, and then verifying the results experimentally. Currently, it provides all code, data, and models produced by running the whole pipeline once, which resulted in a functional and interpretable Sparse Autoencoder for the Llama 3.2-3B model.
