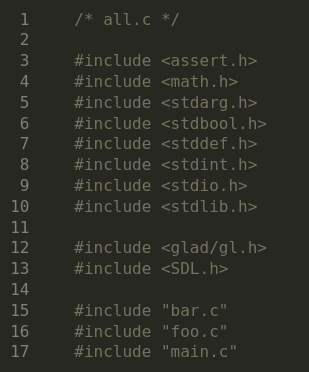

Single GPU training. We are now training GPT-2 (124M) ~7% faster than PyTorch nightly, with no asterisks. That is, the PyTorch baseline is the fastest run I know how to configure for single-GPU training on Ampere, and it includes all the modern, standard bells and whistles: mixed precision training, `torch.compile`, and flash attention. Compared to the current PyTorch stable release (2.3.0) we are ~46% faster, but the folks at PyTorch have been busy and over the last ~month merged a number of changes that greatly speed up the GPT-2 training setting (very nice!). Lastly, compared to the last State of the Union on April 22 (10 days ago), this is a ~3X speedup. A lot of improvements landed over the last ~week to get us here; the major ones include:

Functional. Beyond training efficiency, we are gearing up for a proper reproduction of the GPT-2 miniseries of model sizes, from 124M all the way up to the actual 1.6B model. This will need additional changes, including gradient accumulation, gradient clipping, initialization from random weights directly in C, learning rate warmup and scheduling, evaluation (WikiText 103?), and a modern pretraining dataset (e.g. fineweb?). Many of these components are pending and currently being worked on.
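Three of the items above are small, well-defined pieces of the training loop. As a rough illustration only, here is a pure-Python sketch of them: gradient accumulation by averaging micro-batch gradients, gradient clipping by global L2 norm, and a learning rate schedule with linear warmup. The post does not say which decay shape will follow the warmup, so the cosine decay, the hypothetical function names (`lr_at_step`, `clip_grad_norm`, `accumulate_grads`), and the hyperparameter values in the usage lines are all assumptions, not the llm.c implementation.

```python
import math


def lr_at_step(step, warmup_steps, max_steps, max_lr, min_lr):
    """Hypothetical schedule: linear warmup, then cosine decay to min_lr.
    The cosine shape is an assumption; the post only says "warmup and schedule"."""
    if step < warmup_steps:
        # ramp linearly from max_lr / warmup_steps up to max_lr
        return max_lr * (step + 1) / warmup_steps
    if step >= max_steps:
        return min_lr
    progress = (step - warmup_steps) / (max_steps - warmup_steps)  # in [0, 1)
    coeff = 0.5 * (1.0 + math.cos(math.pi * progress))  # decays from 1 toward 0
    return min_lr + coeff * (max_lr - min_lr)


def clip_grad_norm(grads, max_norm):
    """Rescale a flat list of gradients in place so their global L2 norm is
    at most max_norm. Returns the norm measured before clipping."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm > max_norm:
        scale = max_norm / total_norm
        for i in range(len(grads)):
            grads[i] *= scale
    return total_norm


def accumulate_grads(micro_batch_grads):
    """Gradient accumulation: average equally sized micro-batch gradients so a
    single optimizer step sees the gradient of the larger combined batch."""
    n = len(micro_batch_grads)
    acc = [0.0] * len(micro_batch_grads[0])
    for grads in micro_batch_grads:
        for i, g in enumerate(grads):
            acc[i] += g / n
    return acc


# Illustrative usage (hyperparameters are made up for the example):
grads = accumulate_grads([[3.0, 0.0], [3.0, 8.0]])  # averaged gradient [3.0, 4.0]
pre_clip_norm = clip_grad_norm(grads, max_norm=1.0)  # norm 5.0; grads rescaled to about [0.6, 0.8]
lr = lr_at_step(55, warmup_steps=10, max_steps=100, max_lr=6e-4, min_lr=6e-5)  # about 3.3e-4, halfway through the decay
```

In this sketch clipping runs after accumulation and before the optimizer update, so it bounds the norm of the combined-batch gradient rather than that of each micro-batch.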