This small project tests the novel Kolmogorov-Arnold Network (KAN) architecture in the reinforcement learning paradigm on the CartPole problem.

Kolmogorov-Arnold Networks (KANs) are promising alternatives to Multi-Layer Perceptrons (MLPs). Like MLPs, KANs have strong mathematical foundations: MLPs are based on the universal approximation theorem, while KANs are based on the Kolmogorov-Arnold representation theorem. KANs and MLPs are dual: KANs have activation functions on edges, while MLPs have activation functions on nodes. This simple change makes KANs better (sometimes much better!) than MLPs in terms of both model accuracy and interpretability.
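To make the "activations on edges" idea concrete, here is a minimal NumPy sketch of a single KAN-style layer. Each output is a plain sum of learnable univariate functions of the inputs, $y_j = \sum_i \phi_{j,i}(x_i)$, mirroring the inner sums of the Kolmogorov-Arnold representation, whereas an MLP layer computes $\sigma(Wx + b)$ with a fixed nonlinearity on each node. This is not pykan's implementation: pykan parameterizes each edge function with B-splines (plus a SiLU base term) and trains them with torch autograd, while this sketch swaps in Gaussian radial basis functions for brevity and only shows the forward pass. The class name `RBFKANLayer` and its parameters (`n_basis`, `x_range`) are hypothetical.

```python
import numpy as np


class RBFKANLayer:
    """Hypothetical KAN-style layer with one learnable 1-D function per edge (input i -> output j).

    Edge function: phi_{j,i}(x) = sum_k coef[j, i, k] * exp(-((x - centers[k]) / width) ** 2).
    Gaussian radial basis functions stand in for pykan's B-splines (an assumption of this sketch).
    """

    def __init__(self, n_in, n_out, n_basis=8, x_range=(-2.0, 2.0), seed=0):
        rng = np.random.default_rng(seed)
        # Fixed grid of basis centres shared by all edges.
        self.centers = np.linspace(x_range[0], x_range[1], n_basis)
        self.width = (x_range[1] - x_range[0]) / (n_basis - 1)
        # coef[j, i, k]: weight of basis k in the function on edge (input i -> output j).
        self.coef = rng.normal(0.0, 0.1, size=(n_out, n_in, n_basis))

    def basis(self, x):
        # x: (batch, n_in) -> basis values of shape (batch, n_in, n_basis)
        return np.exp(-(((x[..., None] - self.centers) / self.width) ** 2))

    def forward(self, x):
        # phi[b, j, i] = phi_{j,i}(x[b, i]): every edge activation in the layer.
        phi = np.einsum("bik,jik->bji", self.basis(x), self.coef)
        # Nodes only sum their incoming edges; no fixed nonlinearity is applied on nodes.
        return phi.sum(axis=2)


# Stack two layers to map a CartPole-sized observation (4 values) to 2 action values.
layers = [RBFKANLayer(4, 8, seed=0), RBFKANLayer(8, 2, seed=1)]
obs = np.zeros((3, 4))  # a batch of 3 observations
q = obs
for layer in layers:
    q = layer.forward(q)
print(q.shape)  # (3, 2)
```

Inputs far outside `x_range` drive every basis function towards zero; pykan handles this by adapting its spline grid to the data, which this sketch does not attempt.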

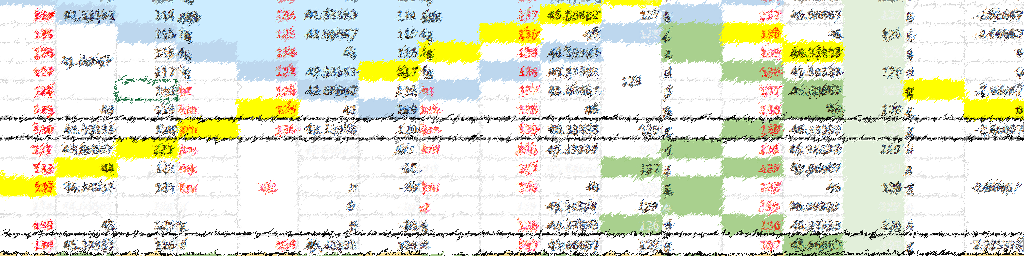

The Kolmogorov-Arnold Q-Network (KAQN) offers a promising avenue in reinforcement learning (RL). In this project, we replace the Multi-Layer Perceptron (MLP) component of Deep Q-Networks (DQN) with a Kolmogorov-Arnold Network (KAN). Furthermore, we employ the Double Deep Q-Network (DDQN) update rule to improve stability and learning efficiency. Initial experiments with KAQN demonstrate its potential in RL tasks. However, challenges remain in effectively applying KAQN to the CartPole environment; one of them is finding good hyperparameters for this specific setting. The following plot shows the evolution of episode length during training on CartPole-v1 over 500 episodes.
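For reference, the Double DQN rule decouples action selection from action evaluation: $y = r + \gamma\,(1 - d)\,Q_{\text{target}}\big(s', \arg\max_{a'} Q_{\text{online}}(s', a')\big)$, where $d$ marks terminal transitions. The sketch below computes these targets with NumPy, assuming the next-state Q-values have already been produced by the online network (here, the KAN) and by a periodically synced target copy. The function name `double_dqn_targets` is hypothetical, and the project's own torch code may organize this differently; the loss, optimizer and target-sync schedule are left out.

```python
import numpy as np


def double_dqn_targets(rewards, terminated, q_online_next, q_target_next, gamma=0.99):
    """Double DQN bootstrap targets for a batch of transitions (s, a, r, s').

    q_online_next / q_target_next: arrays of shape (batch, n_actions) holding the
    Q-values of the next states s' under the online and the target network.
    """
    rewards = np.asarray(rewards, dtype=float)
    terminated = np.asarray(terminated, dtype=float)
    q_online_next = np.asarray(q_online_next, dtype=float)
    q_target_next = np.asarray(q_target_next, dtype=float)
    # The online network selects the greedy next action...
    best_actions = np.argmax(q_online_next, axis=1)
    # ...and the target network evaluates it (plain DQN would use q_target_next.max(axis=1)).
    next_values = q_target_next[np.arange(len(best_actions)), best_actions]
    # Only true terminations stop bootstrapping: with gymnasium, pass the `terminated`
    # flag here, not `truncated` (e.g. CartPole-v1's 500-step time limit).
    return rewards + gamma * (1.0 - terminated) * next_values


# Two toy transitions; the second one ends the episode.
targets = double_dqn_targets(
    rewards=[1.0, 1.0],
    terminated=[0.0, 1.0],
    q_online_next=[[1.0, 2.0], [3.0, 0.5]],
    q_target_next=[[5.0, 4.0], [0.0, 7.0]],
    gamma=0.9,
)
print(targets)  # approximately [4.6, 1.0]
```

In the first transition, plain DQN would bootstrap from the target network's own maximum (5.0, giving 5.5), while Double DQN uses the target network's value for the action the online network prefers (4.0, giving 4.6). This is the mechanism by which DDQN reduces the overestimation bias of the max operator.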

The implementation is minimal (fewer than 200 lines of code) and only requires gymnasium, torch, numpy and pykan, which can be installed via `pip install -r requirements.txt`.