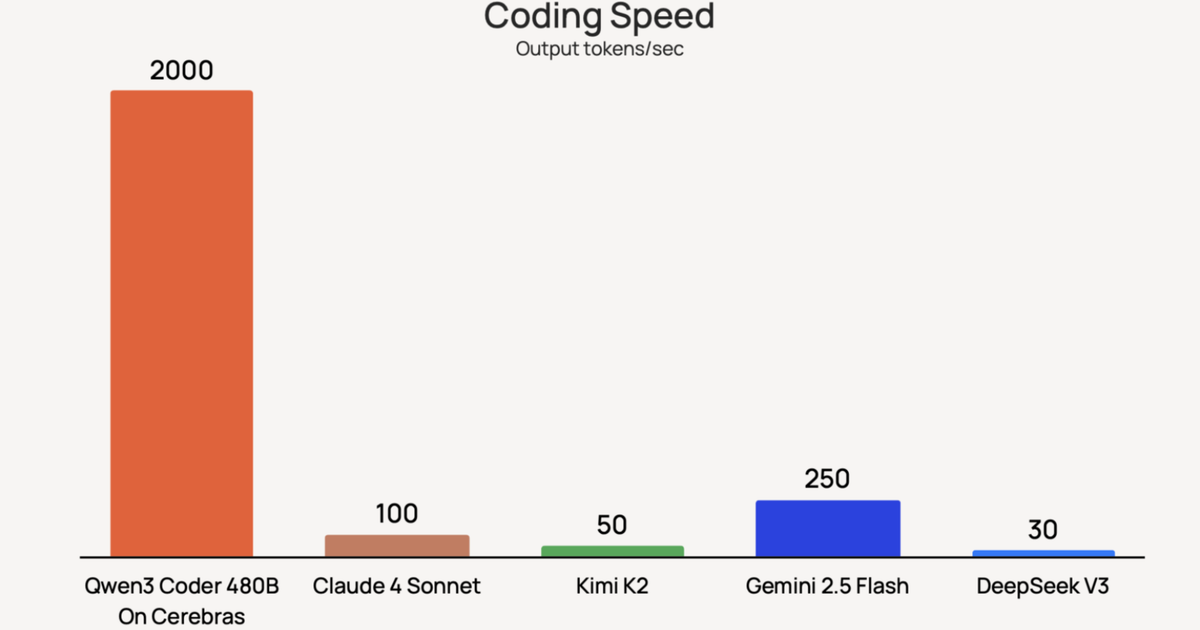

Read This Before You Trust Any AI-Written Code

We are in the era of vibe coding, in which developers let artificial intelligence models generate code from a prompt. Unfortunately, under the hood, the vibes are bad. According to a recent report published by data security firm Veracode, nearly half of the AI-generated code it tested contained security flaws.

Veracode tasked more than 100 large language models with completing 80 separate coding tasks, spanning several programming languages and types of applications. Per the report, each task had a known potential vulnerability, so a model could complete it in either a secure or an insecure way. The results were not exactly inspiring if security is your top priority: only 55% of the completed tasks produced "secure" code.

Now, it'd be one thing if those vulnerabilities were minor flaws that could easily be patched or mitigated. But they're often major holes. In the 45% of cases where the code failed the security check, it introduced a vulnerability from the Open Worldwide Application Security Project's (OWASP) Top 10 list, such as broken access control, cryptographic failures, and data integrity failures. Basically, the flaws are serious enough that you wouldn't want to spin the code up and push it live unless you're looking to get hacked.