Price per token is going down. Price per answer is going up. - Blog | MLOps Community

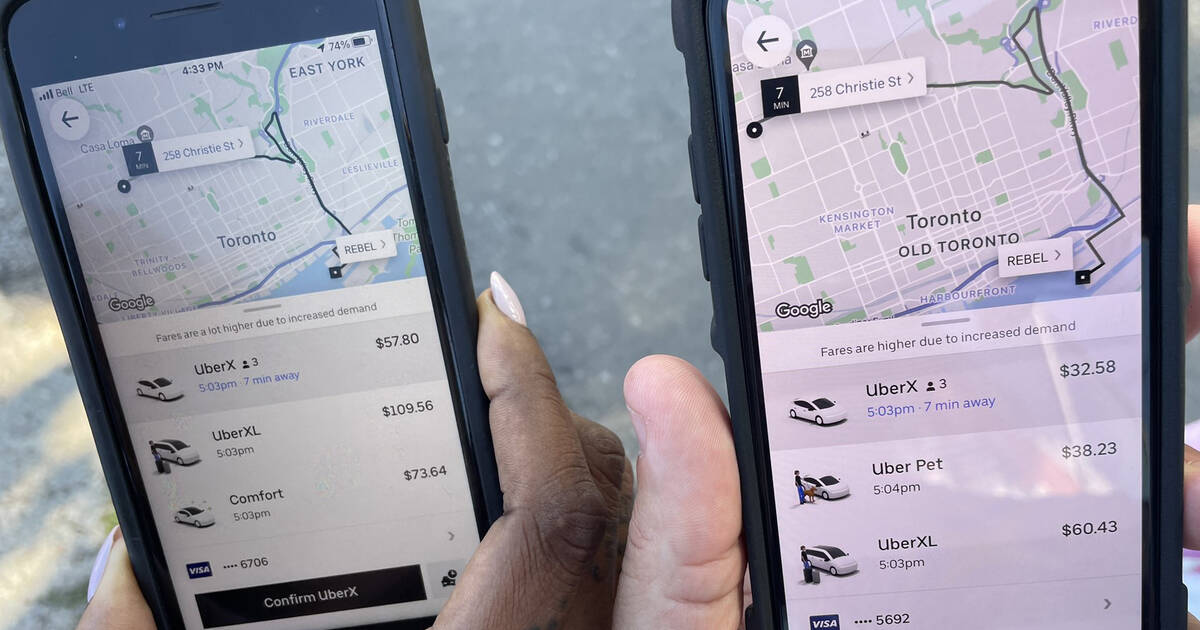

Yes, the price per output token is falling rapidly. That does not mean that things are getting cheaper. Nobody in their right mind would argue that the price per token is going up or even staying constant.

Forget about reasoning for a moment. Forget about o1, which abstracts away extra LLM calls on the backend and charges the end user a higher price. Forget about LLM-as-a-judge and all that fancy stuff. We will get to that later.

I am asking increasingly complex questions of my AI. I am expecting it to “do” more complex tasks for me, much more than just asking ChatGPT questions. I want it to gather various data points, summarize them, and send the summary to colleagues.

My expectation translates to asking questions I wouldn't have thought AI could handle a year ago. It also translates into longer and longer prompts that use more and more of the context window.

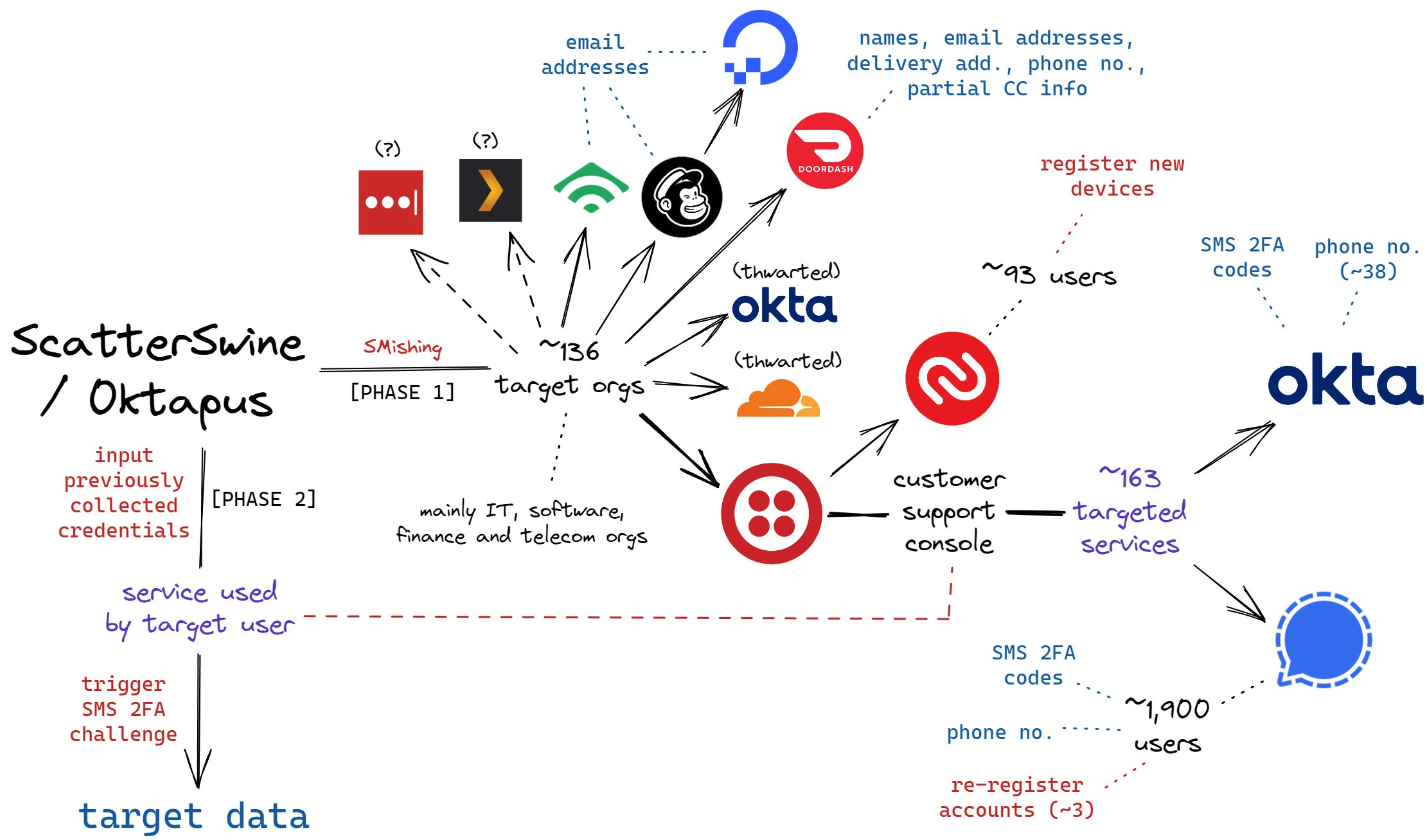

This expectation that AI can handle complexity translates to system complexity on the backend. Before, common practice was to make one LLM call, get a response, and call it a day. Things have changed. I am not saying that use case no longer exists, but I am saying it's less common.
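To make the arithmetic concrete, here is a minimal sketch of how the cost of one answer can rise while the per-token price falls. Every number in it is made up for illustration: the prices, the token counts, and the number of calls are assumptions, not real vendor pricing or measurements, and `LLMCall` and `cost_per_answer` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class LLMCall:
    """Token usage of a single LLM call (hypothetical numbers only)."""
    input_tokens: int
    output_tokens: int


def cost_per_answer(calls, input_price_per_million, output_price_per_million):
    """Dollar cost of all the LLM calls needed to produce one answer."""
    return sum(
        c.input_tokens * input_price_per_million / 1_000_000
        + c.output_tokens * output_price_per_million / 1_000_000
        for c in calls
    )


# Assumed "before": one short call at a higher per-token price.
single_call = [LLMCall(input_tokens=500, output_tokens=300)]
before = cost_per_answer(single_call, 10.0, 30.0)

# Assumed "after": per-token prices are 4x lower, but one answer now
# takes five calls, each with a long prompt filling the context window.
multi_call = [LLMCall(input_tokens=20_000, output_tokens=1_000) for _ in range(5)]
after = cost_per_answer(multi_call, 2.5, 7.5)

print(f"before: ${before:.4f} per answer, after: ${after:.4f} per answer")
```

Under these assumed numbers, the per-token price drops by 4x while the cost per answer goes up roughly 20x, because both the number of calls and the tokens per call grew much faster than the price fell.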