Iceberg-Powered Unification: Why Table/Stream Duality Will Redefine ETL in 2025!

Traditional ETL has long revolved around batch pipelines: Spark jobs ingest static, bounded datasets from data lakes or warehouses, transform them, and write the results to another table or file system. Meanwhile, real-time platforms such as Apache Kafka, Apache Pulsar, and Redpanda have specialized in high-throughput, low-latency pipelines but have often struggled to store and reprocess data at massive scale.

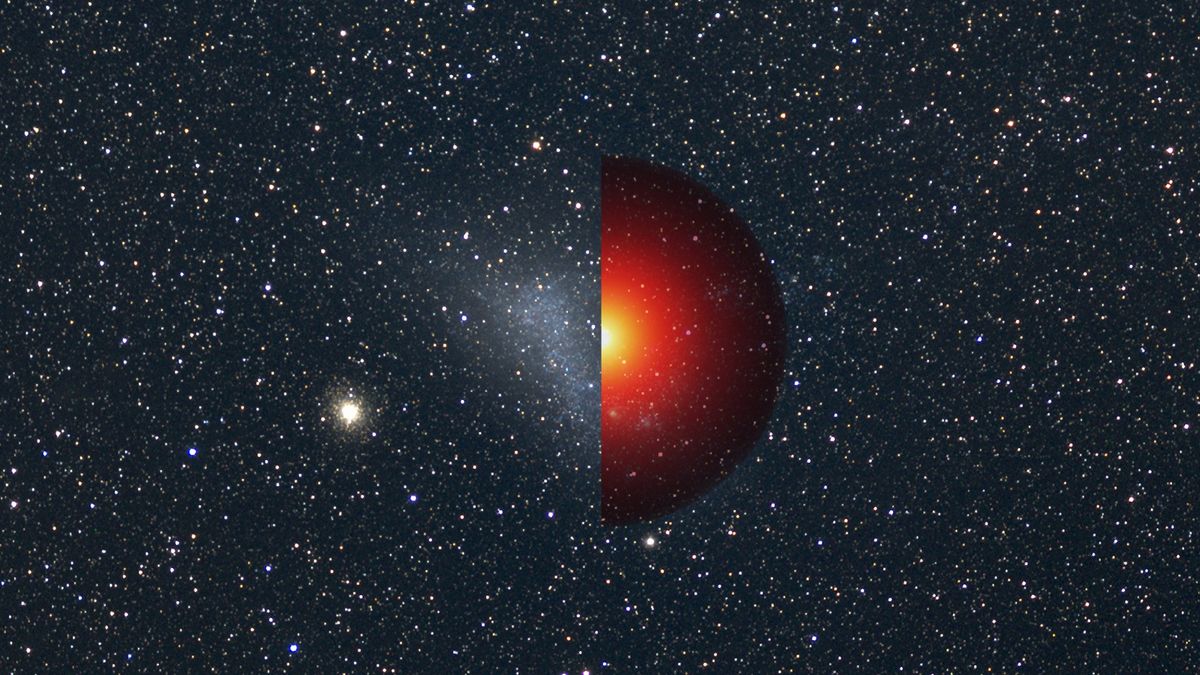

Fast-forward to 2024: multiple vendors began embracing table/stream duality, the idea that a stream and a table are two views of the same data, and promising a shift that could reshape the ETL market starting in 2025. At the center of this evolution sits Apache Iceberg, no longer just a “sink” for streaming data but a fully integrated storage layer that exposes the same data as both a continuous stream and a table.

This post explores how Apache Iceberg unlocks unbounded data storage, merges batch and streaming pipelines under one unified architecture, and heralds a new era for ETL.