Modular: MAX 24.3 - Introducing MAX Engine Extensibility

Today, we’re thrilled to announce the launch of MAX 24.3, highlighting a preview of the new MAX Engine Extensibility API that allows developers to unify, program, and compose their AI pipelines on top of our next-generation compiler and runtime stack for best-in-class performance.

MAX Engine is a next-generation compiler and runtime library for running AI inference. With support for PyTorch (TorchScript), ONNX, and native Mojo models, it delivers low-latency, high-throughput inference on a wide range of hardware to accelerate your entire AI workload. You can also build your own inference models with our MAX Graph APIs to take full advantage of MAX Engine.

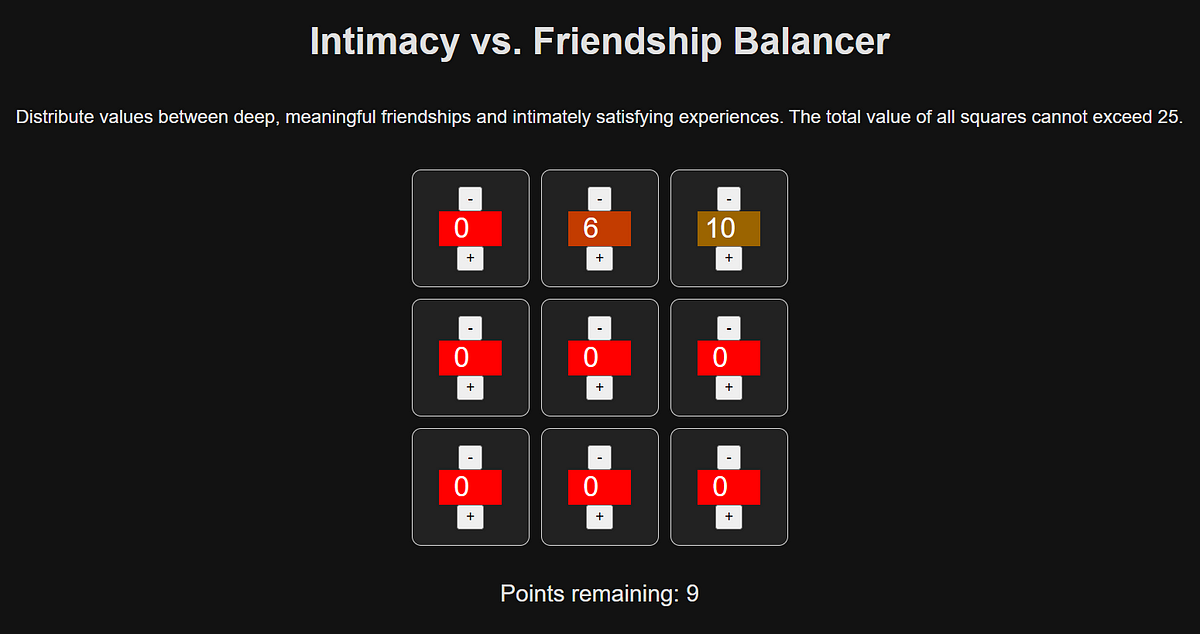

In this release, we continue to build on our technology foundation to improve the programmability of MAX for your workloads.

One of the major features of the MAX 24.3 release is a preview of the ability to easily work with custom operations when building AI pipelines. If you’re new to custom operations in AI pipelines, a custom operation is one that you define and implement yourself. This matters when you want to build novel mathematical operations or algorithms that don’t ship out of the box in AI frameworks like PyTorch or ONNX, when you need to optimize performance for specific tasks, or when you need hardware acceleration that these frameworks don’t natively support.
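To make the idea concrete, here is a minimal, framework-agnostic sketch in plain Python of what "defining your own operation" can look like. It is not the MAX Engine Extensibility API: the names `OP_REGISTRY`, `register_op`, `my_gelu`, and `run_pipeline` are hypothetical and exist only for this illustration. The sketch assumes a pipeline is simply a sequence of named operations applied to a list of floats.

```python
import math
from typing import Callable, Dict, List

Vector = List[float]

# Hypothetical registry mapping operation names to implementations.
# This is an illustration only, not part of MAX, PyTorch, or ONNX.
OP_REGISTRY: Dict[str, Callable[[Vector], Vector]] = {}


def register_op(name: str):
    """Decorator that records a function in the registry under `name`."""
    def decorator(fn: Callable[[Vector], Vector]):
        OP_REGISTRY[name] = fn
        return fn
    return decorator


@register_op("relu")
def relu(xs: Vector) -> Vector:
    # Stand-in for an operation a framework ships out of the box.
    return [max(0.0, x) for x in xs]


@register_op("my_gelu")
def my_gelu(xs: Vector) -> Vector:
    # A user-defined operation: the tanh approximation of GELU.
    c = math.sqrt(2.0 / math.pi)
    return [0.5 * x * (1.0 + math.tanh(c * (x + 0.044715 * x ** 3))) for x in xs]


def run_pipeline(op_names: List[str], xs: Vector) -> Vector:
    """Apply the named operations in order, built-in and custom alike."""
    for name in op_names:
        xs = OP_REGISTRY[name](xs)
    return xs
```

Once registered, the custom operation is called the same way as the built-in one, for example `run_pipeline(["my_gelu", "relu"], [-1.0, 0.0, 2.0])`. A real engine would additionally compile and optimize such operations for the target hardware, which this toy registry does not attempt.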
