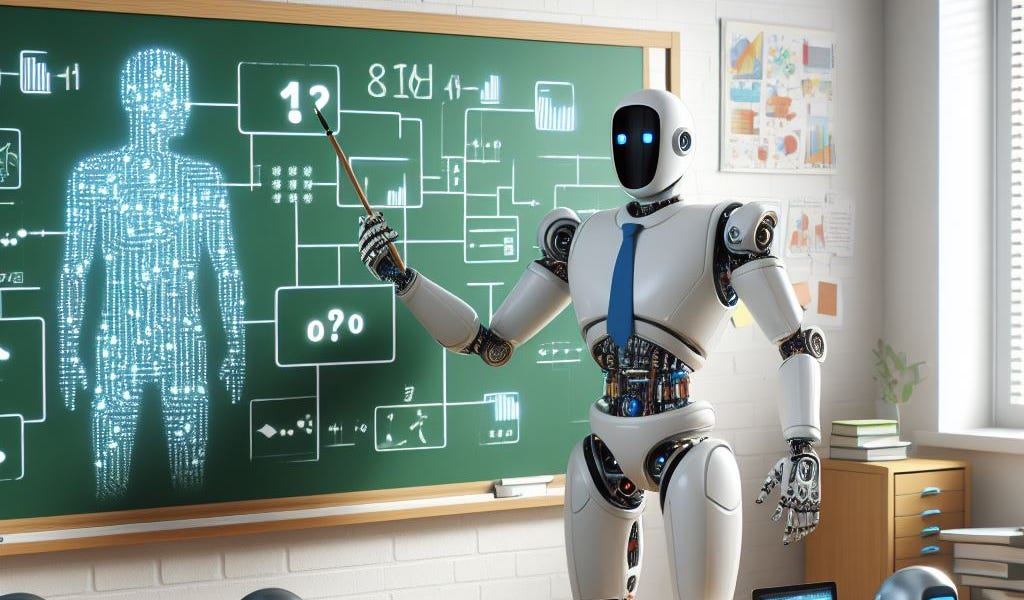

Difference Between Backpropagation and Stochastic Gradient Descent

It is common to hear that neural networks learn using the “back-propagation of error” algorithm or “stochastic gradient descent.” Sometimes either name is used as shorthand for how a neural net is fit on a training dataset, although in many cases there is deep confusion as to what these algorithms are, how they are related, and how they work together.

This tutorial is designed to make the roles of the stochastic gradient descent and back-propagation algorithms clear in training neural networks.

Gradient descent is an optimization algorithm that searches for the values of the input variables of a target function that result in the smallest value of that function, called the minimum of the function.

You may recall from calculus that the first-order derivative of a function gives the slope, or rate of change, of the function at a given point. Read left to right, a positive derivative means the target function is sloping uphill and a negative derivative means it is sloping downhill.
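To make the sign of the derivative concrete, here is a minimal sketch. The target function `f(x) = x^2`, the helper name `derivative`, and the step `h` are assumptions chosen for illustration; the derivative is estimated numerically with a central difference rather than derived by hand.

```python
# Illustrative target function (an assumption for this sketch): f(x) = x^2
def f(x):
    return x ** 2.0

# Hypothetical helper: central-difference estimate of the first derivative
def derivative(func, x, h=1e-6):
    return (func(x + h) - func(x - h)) / (2.0 * h)

# Left of the minimum the function slopes downhill (negative derivative)
slope_left = derivative(f, -1.0)
# Right of the minimum the function slopes uphill (positive derivative)
slope_right = derivative(f, 1.0)

print("slope at x=-1.0:", slope_left)
print("slope at x=+1.0:", slope_right)
```

The two estimates have opposite signs, which tells us that the minimum of this function lies somewhere between the two points.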

If the target function takes multiple input variables, they can be taken together as a vector of variables. Working with vectors and matrices is referred to as linear algebra, and doing calculus with structures from linear algebra is called matrix calculus or vector calculus. In vector calculus, the vector of first-order derivatives (partial derivatives) is generally referred to as the gradient of the target function.
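As a rough sketch of how the gradient is used, consider a two-variable target function. The function `objective(x, y) = x^2 + y^2`, the starting point, the step size of 0.1, and the 50 iterations are all assumptions made for illustration. The update shown, moving the point a small step against the gradient, is the standard gradient descent rule.

```python
# Illustrative two-input target function (an assumption): f(x, y) = x^2 + y^2
def objective(x, y):
    return x ** 2.0 + y ** 2.0

# Gradient: the vector of partial derivatives [df/dx, df/dy]
def gradient(x, y):
    return [2.0 * x, 2.0 * y]

point = [1.0, -2.0]   # arbitrary starting point
step_size = 0.1       # assumed step size, small enough for this function

for _ in range(50):
    grad = gradient(point[0], point[1])
    # Step in the direction opposite to the gradient (downhill)
    point = [p - step_size * g for p, g in zip(point, grad)]

final_value = objective(point[0], point[1])
print("final point:", point)
print("objective at final point:", final_value)
```

With these settings the point moves steadily toward the origin, where this particular function has its minimum. A different function or step size may behave differently.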
