Dermatology AI that can reason like a doctor | MDandMe

Human clinicians diagnose skin conditions by combining image recognition (based on what they have seen before) with semantic reasoning (based on what they have read or heard about). This additional dimension of clinical context has historically given people a competitive edge over computer vision programs. Many conditions, especially rare ones with vague presentations, are impossible to diagnose purely visually and are recognized only when careful history-taking accompanies visual inspection.

Here, we demonstrate that large language model technology, in particular GPT-4o, can convincingly use clinical context to enhance dermatological image interpretation in ways analogous to the semantic reasoning of a real dermatologist. An important caveat, consistent with recent cautionary research findings from Apple, is that the “reasoning” of GPT-4o is susceptible to influence from erroneous or irrelevant information.

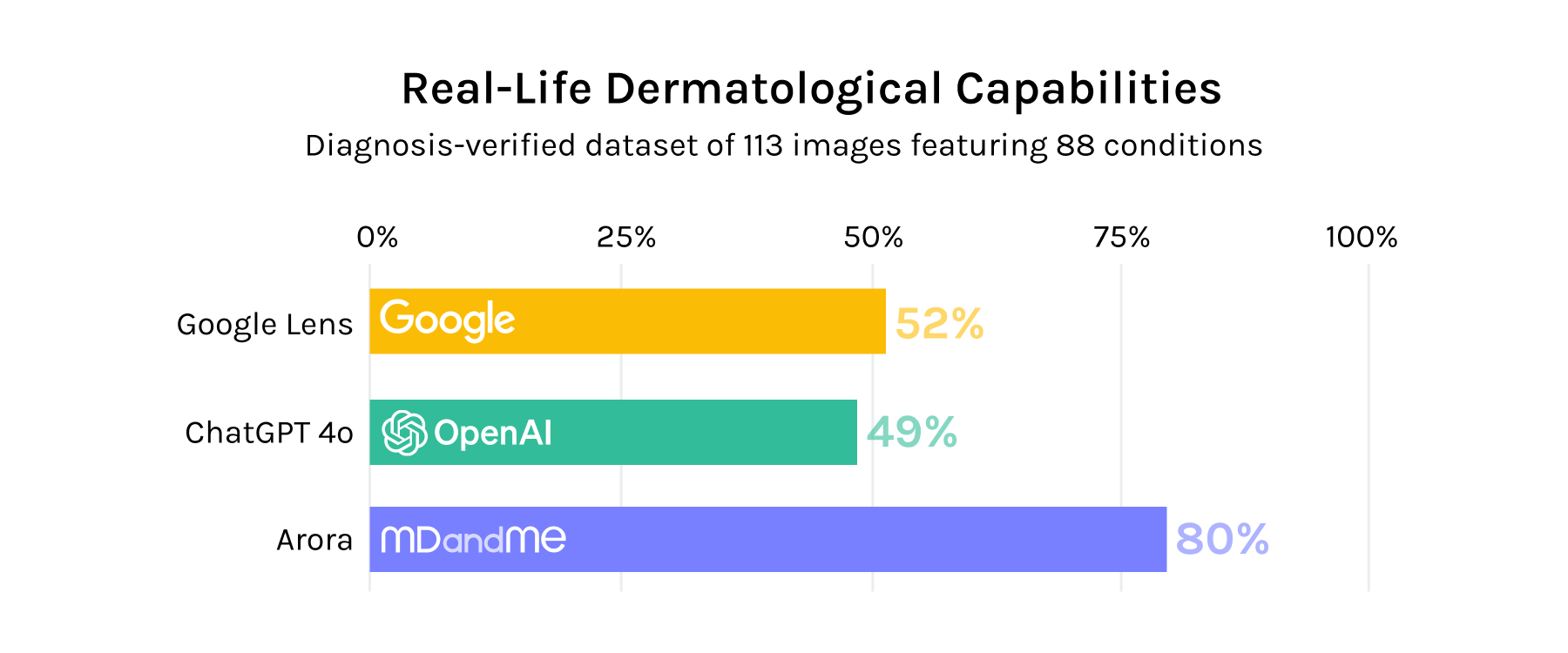

When the right context is gathered and submitted to the LLM at the right time, which is the proprietary capability of MDandMe, our GPT-4o-based dermatology framework achieves >80% accuracy on a complex, diagnosis-verified dataset of 113 images featuring 88 conditions. When key context is not collected or used, as tends to happen with GPT-4o on ChatGPT, the LLM more frequently anchors on the wrong path and achieves only around 50% accuracy on the same dataset. By comparison, Google Lens, a traditional computer vision program that does not use clinical context, found the right answer in 52% of cases. See Appendix Figure 1 for details.
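For readers who want to see how a headline accuracy figure like the ones above is typically computed, here is a minimal Python sketch. It is not our evaluation code: the `Case` structure, the exact-match scoring rule, and the four toy cases are all assumptions made for illustration, and the actual scoring criteria are described in the appendix.

```python
from dataclasses import dataclass


@dataclass
class Case:
    """One evaluated image (hypothetical structure, for illustration only)."""
    image_id: str
    verified_diagnosis: str
    predicted_diagnosis: str


def normalize(label: str) -> str:
    # Lowercase and collapse whitespace so trivial formatting
    # differences do not count as misses.
    return " ".join(label.lower().split())


def accuracy(cases: list[Case]) -> float:
    """Fraction of cases whose prediction matches the verified diagnosis.

    Assumes exact-match scoring after normalization; a real evaluation
    may use a different rule (e.g. clinician-adjudicated matches).
    """
    if not cases:
        raise ValueError("no cases to score")
    correct = sum(
        normalize(c.verified_diagnosis) == normalize(c.predicted_diagnosis)
        for c in cases
    )
    return correct / len(cases)


def approx_correct(accuracy_pct: float, n_images: int) -> int:
    """Approximate number of correct images implied by a reported percentage."""
    return round(accuracy_pct / 100 * n_images)


# Invented toy cases, NOT drawn from the 113-image dataset.
toy_cases = [
    Case("img-1", "Psoriasis", "psoriasis"),
    Case("img-2", "Tinea corporis", "Tinea  corporis"),
    Case("img-3", "Lichen planus", "Eczema"),
    Case("img-4", "Rosacea", "rosacea"),
]

print(f"toy accuracy: {accuracy(toy_cases):.2f}")
print(f"52% of 113 images is about {approx_correct(52, 113)} images")
```

Under this reading, the 52% reported for Google Lens corresponds to roughly 59 of the 113 images.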