Seeing with Your Ear: A Humble Experiment in AI, Depth, and Spatial Sound

What if an ordinary camera could help you “hear” your surroundings? That’s the idea behind a little experiment I hacked together: a11y-deepsee, a live depth-to-audio system built with a regular webcam, an AI model for depth perception, and spatial 3D sound via OpenAL. No LiDAR, no special gear. Just standard hardware and some open tools.

The concept of translating visual information into sound to help blind or visually impaired people isn’t new. Projects like The vOICe, Sound of Vision, and EyeMusic have explored converting depth or color to audio. More recently, EchoSee (2024) used iPhone LiDAR plus AirPods Pro to create a spatial soundscape. So why hasn’t this taken off?

To be clear, a11y-deepsee is not a product, not a startup, and definitely not a replacement for a cane. It’s just a fun experiment to explore the potential.

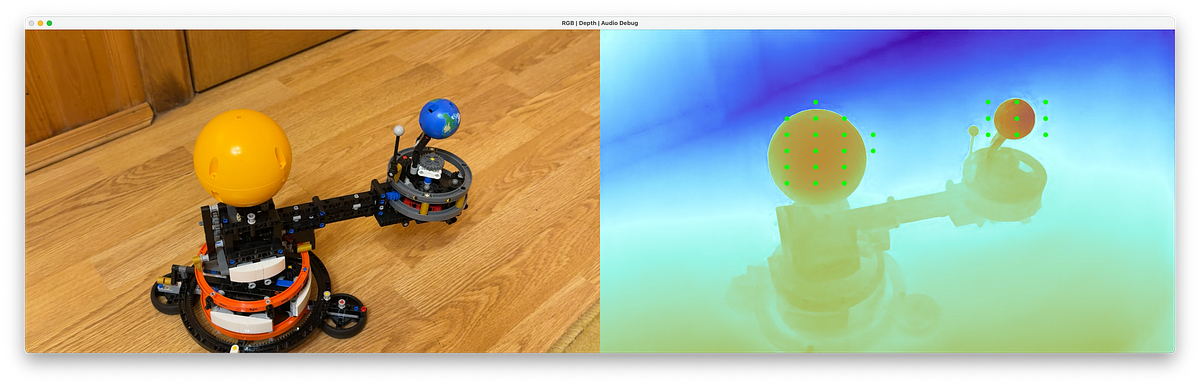

The system captures live video from your built-in camera. The Depth Anything model processes each frame and spits out a depth map. The app then picks points across the image (in a grid), maps their position and distance, and turns each into an audio source in 3D space. You hear the closest points as louder, and the direction is panned spatially using OpenAL’s engine.
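To make the grid-to-sound step concrete, here is a minimal sketch of how a depth map could be turned into spatial audio points. It is not the actual a11y-deepsee code. It assumes the depth map has already been normalized to [0, 1] with 1.0 meaning "nearest" (Depth Anything's raw output is relative rather than metric, so some normalization step is assumed). It also assumes a hypothetical 60° × 45° camera field of view. The names `AudioPoint` and `depth_grid_to_sources` are illustrative only. Each grid cell becomes a unit direction vector in OpenAL's default listener frame (+x right, +y up, −z straight ahead), and closeness is carried by the gain.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class AudioPoint:
    x: float     # +x is to the listener's right
    y: float     # +y is up
    z: float     # -z is straight ahead (OpenAL's default listener orientation)
    gain: float  # 0.0 (far/quiet) .. 1.0 (nearest/loudest)


def depth_grid_to_sources(
    depth: Sequence[Sequence[float]],
    rows: int,
    cols: int,
    fov_h_deg: float = 60.0,  # assumed horizontal field of view
    fov_v_deg: float = 45.0,  # assumed vertical field of view
) -> List[AudioPoint]:
    """Sample a depth map on a rows x cols grid and turn each sample into a
    spatial audio point.

    Assumes depth[r][c] is normalized to [0, 1], where 1.0 means "nearest".
    """
    h, w = len(depth), len(depth[0])
    fov_h = math.radians(fov_h_deg)
    fov_v = math.radians(fov_v_deg)
    points: List[AudioPoint] = []
    for i in range(rows):
        for j in range(cols):
            # Pixel at the center of grid cell (i, j).
            r = min(int((i + 0.5) * h / rows), h - 1)
            c = min(int((j + 0.5) * w / cols), w - 1)
            # Clamp so noisy values stay inside the valid gain range.
            nearness = min(max(float(depth[r][c]), 0.0), 1.0)
            # Normalized image coordinates in [0, 1], converted to angles
            # relative to the camera's optical axis.
            u = (c + 0.5) / w
            v = (r + 0.5) / h
            azimuth = (u - 0.5) * fov_h    # negative = left of center
            elevation = (0.5 - v) * fov_v  # positive = up (image rows grow downward)
            # Unit direction vector; gain, not distance, encodes closeness.
            x = math.sin(azimuth) * math.cos(elevation)
            y = math.sin(elevation)
            z = -math.cos(azimuth) * math.cos(elevation)
            points.append(AudioPoint(x, y, z, gain=nearness))
    return points
```

In OpenAL's C API, each point would map onto `alSource3f(source, AL_POSITION, x, y, z)` and `alSourcef(source, AL_GAIN, gain)`. Keeping every source at unit distance means OpenAL's built-in distance attenuation treats them all the same, so loudness comes only from the depth-derived gain. That is one design choice among several; placing sources at a depth-derived distance and letting OpenAL's distance model attenuate them would be a reasonable alternative.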
