Why You Shouldn’t Treat Your Database as an Integration Platform

In this post I’ll outline a workflow, built on a platform that my team and I have been working on, that serves as an alternative to the pattern where the database tries to act as storage, message bus, and audit log all at once. The alternative does not couple downstream services to your database, so the database and the services can evolve separately and quickly.

Rising operational load. Teams must monitor offsets, resize partitions, and debug CDC failures rather than focus on indexes and queries.

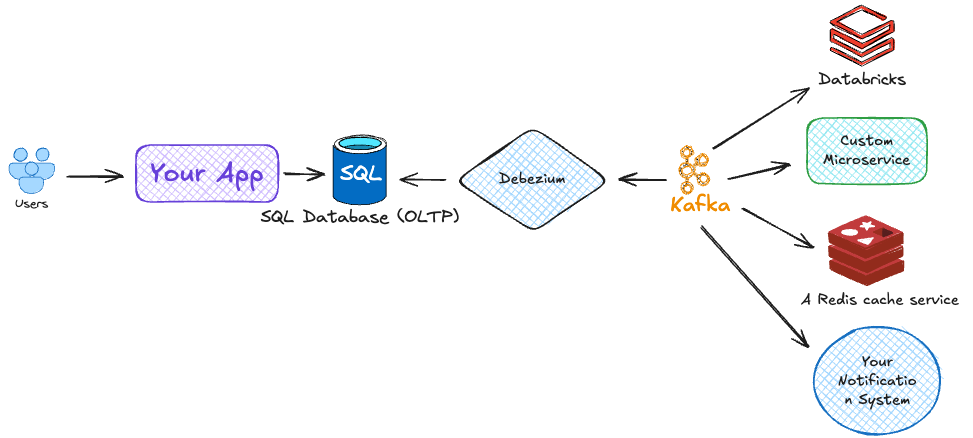

A client sends a request, the application inserts a row, and every other system learns about the change by reading the database binlog or WAL. In practice that means a Debezium connector turns physical updates into a stream, a Kafka cluster carries the stream, and half a dozen consumers process the messages.
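To make that flow concrete, here is a minimal in-memory sketch. It is not a real Debezium or Kafka setup: `make_change_event` and `InMemoryTopic` are hypothetical helpers, the `orders` table and its columns are invented, and the event shape is only loosely modeled on a Debezium-style envelope with `before`, `after`, `op`, and `source` fields.

```python
from typing import Callable, Optional


def make_change_event(table: str, before: Optional[dict], after: Optional[dict], op: str) -> dict:
    """Build a row-level change event (hypothetical helper, Debezium-like shape)."""
    return {"source": {"table": table}, "op": op, "before": before, "after": after}


class InMemoryTopic:
    """Stand-in for a Kafka topic: every subscriber receives every event."""

    def __init__(self) -> None:
        self.subscribers: list[Callable[[dict], None]] = []

    def subscribe(self, handler: Callable[[dict], None]) -> None:
        self.subscribers.append(handler)

    def publish(self, event: dict) -> None:
        for handler in self.subscribers:
            handler(event)


topic = InMemoryTopic()
seen_by_search: list[dict] = []
seen_by_billing: list[dict] = []
topic.subscribe(seen_by_search.append)
topic.subscribe(seen_by_billing.append)

# The application only inserts a row. The "connector" turns that insert
# into an event carrying the full row image, and every consumer gets it.
event = make_change_event(
    table="orders",
    before=None,
    after={"id": 1, "customer_email": "a@example.com", "total_cents": 1250},
    op="c",  # "c" marks a create in this sketch
)
topic.publish(event)
```

Note that both consumers receive the whole row, whether or not they need every column.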

At that point the database is performing three jobs at once. It stores transactional data, distributes every change, and keeps a history that downstream teams rely on for replay. Each extra role tightens the coupling. Downstream services become glued to table shapes even when they need only a subset of columns. A single column rename forces connector redeploys, topic schema edits, and hand-rolled back-fill scripts.
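The rename problem is easy to reproduce in a few lines. The sketch below is illustrative only: `billing_consumer`, `try_consume`, and the column names are assumptions, not part of any real connector or schema. The consumer needs a single column, yet it still breaks the moment that column is renamed in the table.

```python
def billing_consumer(event: dict) -> str:
    """Hypothetical consumer: needs one column, but depends on its exact name."""
    row = event["after"]
    return row["customer_email"]


def try_consume(event: dict) -> str:
    """Run the consumer and report a missing column instead of crashing."""
    try:
        return billing_consumer(event)
    except KeyError as exc:
        return f"broken: missing column {exc}"


# Event emitted before the rename: the row image mirrors the table shape.
event_before_rename = {
    "op": "u",
    "after": {"id": 1, "customer_email": "a@example.com", "notes": "gift"},
}

# Same logical change after `customer_email` is renamed to `email`.
event_after_rename = {
    "op": "u",
    "after": {"id": 1, "email": "a@example.com", "notes": "gift"},
}

print(try_consume(event_before_rename))  # a@example.com
print(try_consume(event_after_rename))   # broken: missing column 'customer_email'
```

Nothing about the billing logic changed, but the consumer fails because the event is a direct projection of the physical table.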
