Fine-tune Llama 3 on a million-scale dataset on a consumer GPU using QLoRA and DeepSpeed

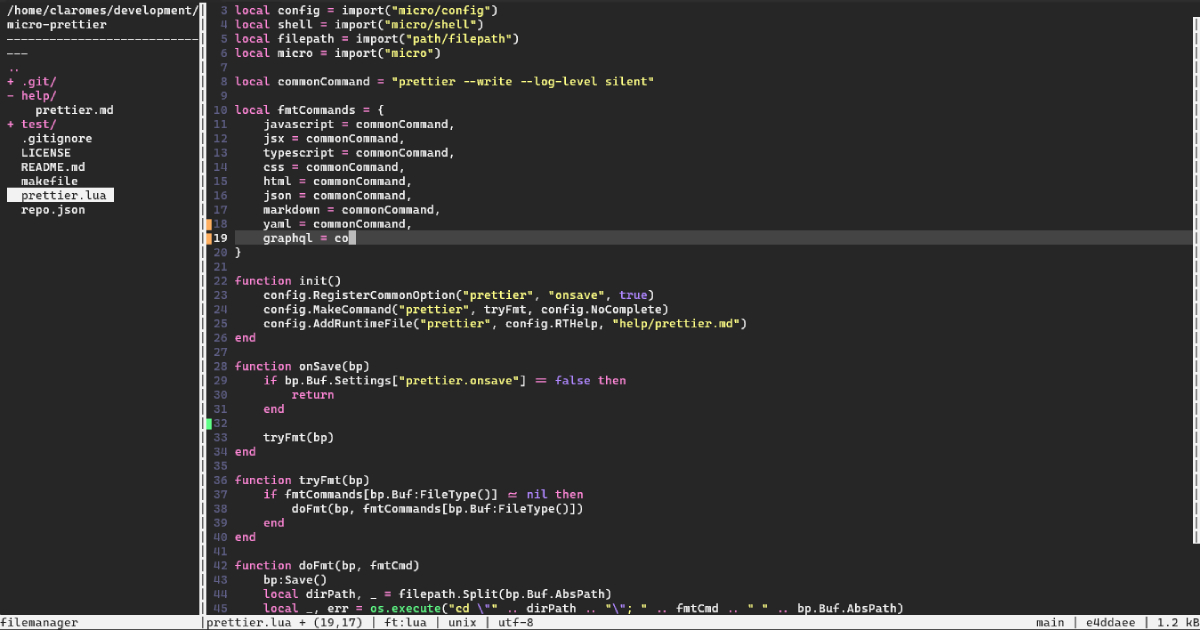

I’m a full-time Software Engineer 2 at the core of our platform team. In my scarce free time, I explore various aspects of the machine learning world, with interests in tabular data, NLP, and sound. What I’m sharing here is a collection of scraps from all over the internet, consolidated into one place. I have decent experience training small NLP models and have submitted a solution to a Kaggle competition using DeBERTa v3, scoring well enough to land in the top 50%, but I have never worked with large language models before. This is my first time, so please let me know if there are any oversights. And yes, this is also my first blog post. Writing it will definitely help me, and hopefully it will be useful to readers as well.

Who doesn’t know about the long-necked creature that has been shaking up the AI field since its birth? Jokes apart, the release of Llama kicked off a revolution in open-source LLMs that doesn’t look like it will stop in the near future.

To learn about Llama in more technical depth, do check out this Post | LinkedIn; it is one of the most technically simplified explanations I could find anywhere on the internet. The architecture uses a few very cool techniques, such as Grouped-Query Attention (GQA), the KV cache, and Rotary Positional Embeddings (RoPE), but these are outside the scope of this article. Meta has continued releasing new versions of Llama, with the latest arriving just a few days ago, this time with a massive amount of data compacted into a few GBs of parameters.
