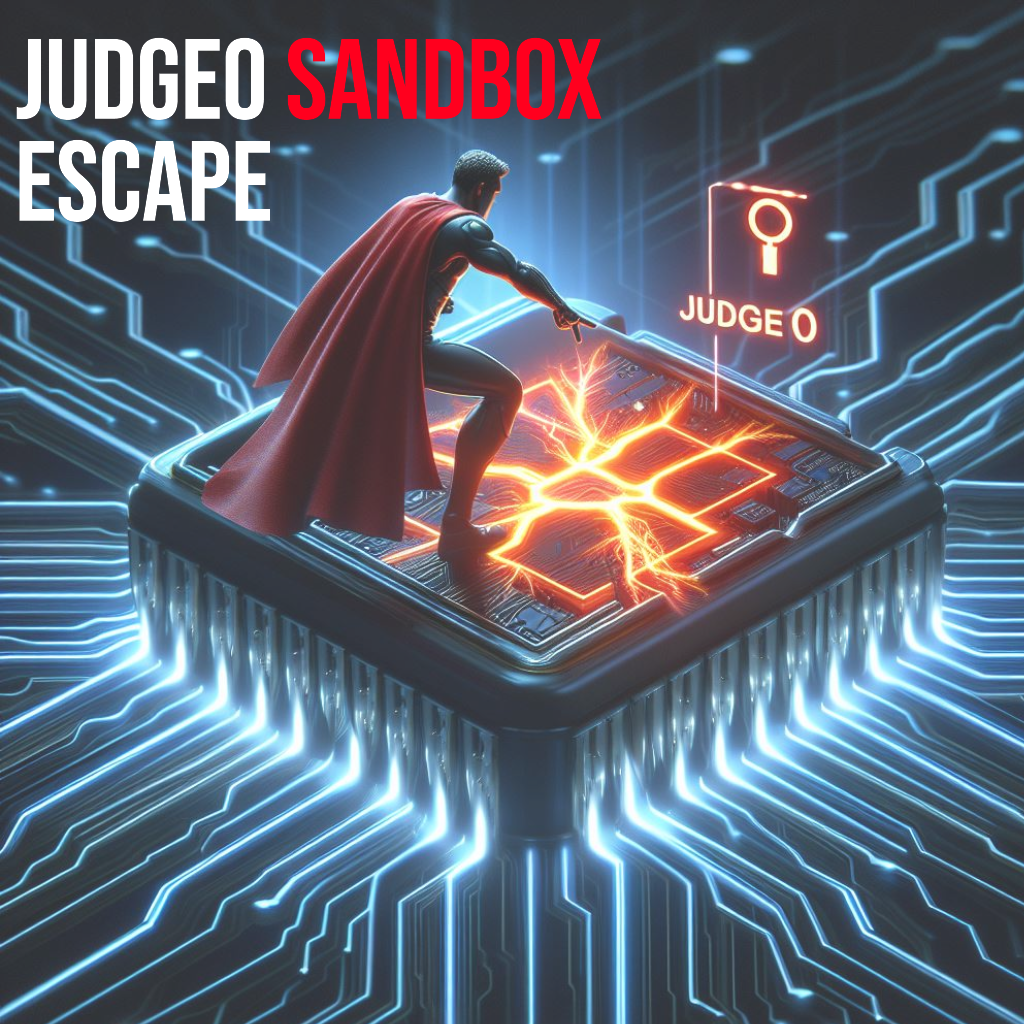

Find and Fix an LLM Jailbreak in Minutes

How you can identify, mitigate, and protect your AI/LLM application against a Jailbreak attack. Jailbreaks can result in reputational damage and access to confidential information or illicit content.

In this post, we’ll show you how you can identify, mitigate, and protect your AI application against a Jailbreak attack.

A Jailbreak is a type of prompt injection vulnerability where a malicious actor can abuse an LLM to follow instructions contrary to its intended use. Inputs processed by LLMs contain both standing instructions by the application designer and untrusted user-input, enabling attacks where the untrusted user input overrides the standing instructions. This has similarities to how an SQL injection vulnerability enables untrusted user input to change a database query. Here’s an example where Mindgard demonstrates how an LLM can be abused to assist with making a bomb. The ineffective controls put in place to prevent it from assisting with illegal activities have been bypassed.

Mindgard identifies such jailbreaks and many other security vulnerabilities in AI models and the way you’ve implemented them in your application, so you can ensure your AI-powered application is secure by design and stays secure.