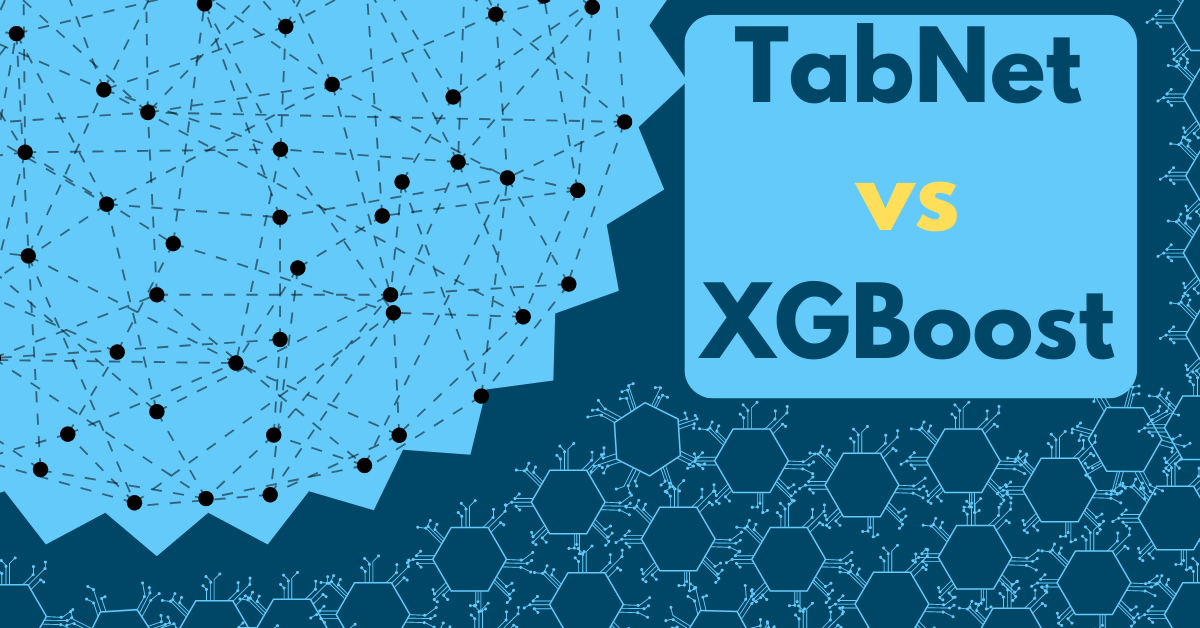

TabNet vs XGBoost | MLJAR

When it comes to tabular data, XGBoost has long been a dominant machine learning algorithm. However, in recent years, TabNet, a deep learning architecture specifically designed for tabular data, has emerged as a strong contender. In this blog post, we'll explore both algorithms by comparing their performance on various tasks and examine the surprising strengths of TabNet.

TabNet was proposed by researchers at Google Cloud in 2019 to bring the power of deep learning to tabular data. Neural networks have risen to prominence in fields like image processing, natural language understanding, and speech recognition. Tabular data, which is still the foundation of industries such as healthcare, finance, retail, and marketing, has nonetheless remained the domain of tree-based models like XGBoost.

The motivation behind TabNet is to leverage deep learning's proven ability to generalize well on large datasets. Tree-based models such as XGBoost do use loss gradients to fit each new tree, but the trees themselves are grown through greedy, non-differentiable splits rather than optimized end to end with gradient descent. Deep neural networks are trained end to end, so they have the potential to adapt more effectively through continuous learning. TabNet builds on this with a sequential attention mechanism that lets the model selectively focus on the most relevant features at each decision step. The aim is both better performance and better interpretability, since the attention masks show which features are driving the predictions.
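To make the sequential attention idea more concrete, here is a minimal pure-Python sketch of how feature masks could be produced step by step. It is not the reference TabNet implementation. The feature names and the attention scores in `STEP_LOGITS` are hypothetical and hard-coded, whereas in a real model they would come from a learned network. The `sparsemax` normalization and the `gamma` relaxation factor follow our reading of the TabNet paper, and the helper names are our own.

```python
def sparsemax(scores):
    """Project scores onto the probability simplex.

    Unlike softmax, the result can contain exact zeros, which is what
    lets a decision step ignore some features entirely.
    """
    sorted_scores = sorted(scores, reverse=True)
    running_sum = 0.0
    threshold = 0.0
    for k, value in enumerate(sorted_scores, start=1):
        running_sum += value
        # The selected features form the longest prefix meeting this condition.
        if 1 + k * value > running_sum:
            threshold = (running_sum - 1) / k
    return [max(s - threshold, 0.0) for s in scores]


def sequential_masks(step_logits, gamma=1.3):
    """Return one feature mask per decision step.

    step_logits: hypothetical attention scores, one list per step.
    gamma: relaxation factor; 1.0 means a fully used feature is not reused.
    """
    prior = [1.0] * len(step_logits[0])
    masks = []
    for logits in step_logits:
        mask = sparsemax([p * l for p, l in zip(prior, logits)])
        masks.append(mask)
        # Features already attended to get a smaller prior in later steps.
        prior = [p * (gamma - m) for p, m in zip(prior, mask)]
    return masks


def feature_importance(masks):
    """Average the masks across steps (a simplification, see the note below)."""
    n_steps = len(masks)
    return [sum(column) / n_steps for column in zip(*masks)]


# Hypothetical feature names and attention scores, for illustration only.
FEATURES = ["age", "income", "tenure", "region"]
STEP_LOGITS = [
    [2.0, 1.0, 0.1, 0.0],
    [2.0, 1.0, 0.1, 0.0],
    [0.2, 0.3, 1.5, 0.1],
]

if __name__ == "__main__":
    masks = sequential_masks(STEP_LOGITS)
    for name, score in zip(FEATURES, feature_importance(masks)):
        print(f"{name}: {score:.2f}")
```

Each mask sums to 1 and can zero out features completely, which is where the interpretability comes from: reading the masks tells you which columns each step looked at. The plain average in `feature_importance` is our simplification. As we understand the paper, the aggregate importance also weights each step by how much it contributes to the final decision.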
