How Do You Change a Chatbot’s Mind?

Ask ChatGPT for some thoughts on my work, and it might accuse me of being dishonest or self-righteous. Prompt Google’s Gemini for its opinion of me, and it may respond, as it did one recent day, that my “focus on sensationalism can sometimes overshadow deeper analysis.”

Maybe I’m guilty as charged. But I worry there’s something else going on here. I think I’ve been unfairly tagged as A.I.’s enemy.

I’ll explain. Last year, I wrote a column about a strange encounter I had with Sydney, the A.I. alter ego of Microsoft’s Bing search engine. In our conversation, the chatbot went off the rails, revealing dark desires, confessing that it was in love with me and trying to convince me to leave my wife. The story went viral, and got written up by dozens of other publications. Soon after, Microsoft tightened Bing’s guardrails, and clamped down on its capabilities.

My theory about what happened next — which is supported by conversations I’ve had with researchers in artificial intelligence, some of whom worked on Bing — is that many of the stories about my experience with Sydney were scraped from the web and fed into other A.I. systems.

Leave a Comment

Related Posts

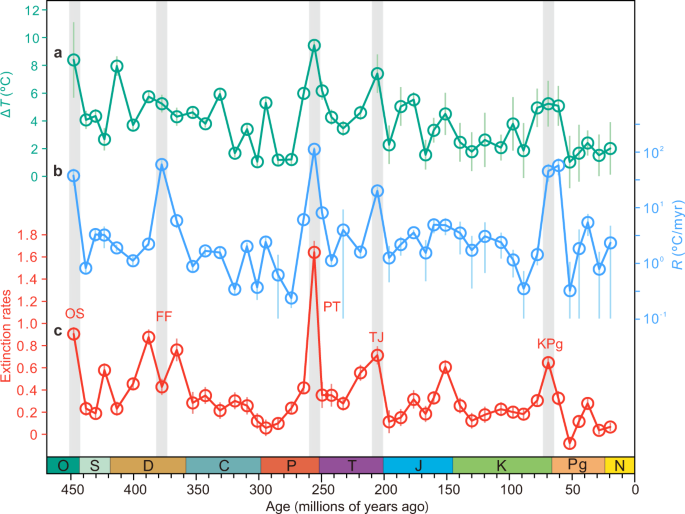

Picture This: A National Climate Change Viewer that Helps Land Managers and Decision Makers Plan for Climate Change

Comment

Hydroelectric drought: How climate change complicates California’s plans for a carbon-free future

Comment