Why I Built a Tool to Test AI's Command Line AX

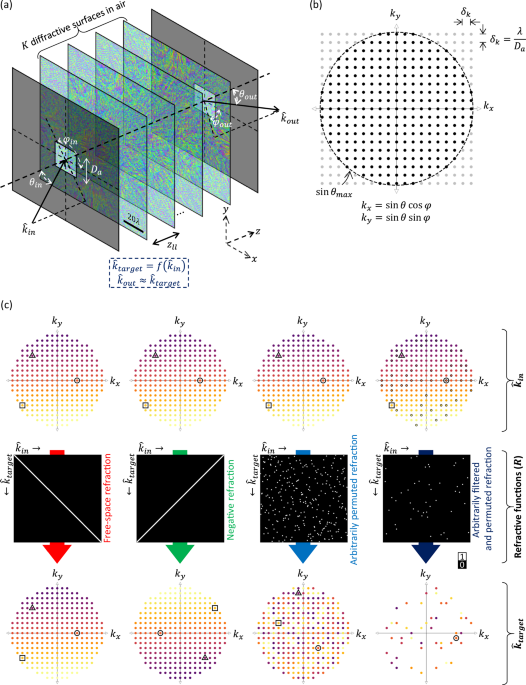

TL;DR >> Built AgentProbe to test how AI agents interact with CLI tools. Even simple commands like 'vercel deploy' show massive variance: 16-33 turns across runs, 40% success rate. The tool reveals specific friction points and grades CLI 'agent-friendliness' from A-F. Now available for Claude Code MAX subscribers. <<

That `vercel deploy` wasn’t a complex multi-step deployment. It was the simplest possible case, and it still revealed something broken about how we’re building for the AI-native era.

I’ve pushed 50+ projects with AI agents in recent months. The pattern became undeniable: agents don’t fail because they’re dumb. They fail because our tools are hostile.

Watch Claude spiral for hours clicking an unclickable interface. Watch it misinterpret error messages written for humans who can read between the lines. Watch it retry the same failing command because the output gives zero actionable feedback.

Each scenario gets an AX Score (Agent Experience Score), graded A through F like a school report card, but measuring how well your CLI plays with AI agents.
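AgentProbe's actual scoring rubric isn't spelled out here, so what follows is only a hypothetical illustration of the idea. It takes repeated runs of one scenario, computes a success rate and the spread of turns, and maps the success rate onto a letter grade. The `Run` record, the `summarize` function, and the grade cutoffs are all assumptions made up for this sketch. They are not AgentProbe's implementation.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Run:
    """One agent attempt at a scenario (hypothetical record shape)."""
    succeeded: bool
    turns: int


# Hypothetical success-rate cutoffs, checked from best grade to worst.
# These are NOT AgentProbe's real thresholds.
GRADE_CUTOFFS = [(0.9, "A"), (0.75, "B"), (0.6, "C"), (0.4, "D")]


def summarize(runs: list[Run]) -> dict:
    """Aggregate repeated runs into success rate, turn spread, and a grade."""
    if not runs:
        raise ValueError("need at least one run")
    success_rate = sum(r.succeeded for r in runs) / len(runs)
    turns = [r.turns for r in runs]
    # First cutoff the success rate clears wins; anything below all cutoffs is F.
    grade = next((g for cutoff, g in GRADE_CUTOFFS if success_rate >= cutoff), "F")
    return {
        "success_rate": success_rate,
        "min_turns": min(turns),
        "max_turns": max(turns),
        "mean_turns": mean(turns),
        "grade": grade,
    }


# Five made-up runs of a single scenario.
runs = [Run(True, 16), Run(False, 33), Run(True, 21), Run(False, 28), Run(False, 30)]
summary = summarize(runs)
print(summary["grade"], summary["success_rate"])  # D 0.4
```

Reporting the min and max turns alongside the success rate matters because two CLIs with the same pass rate can still differ a lot in how erratically an agent gets there.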