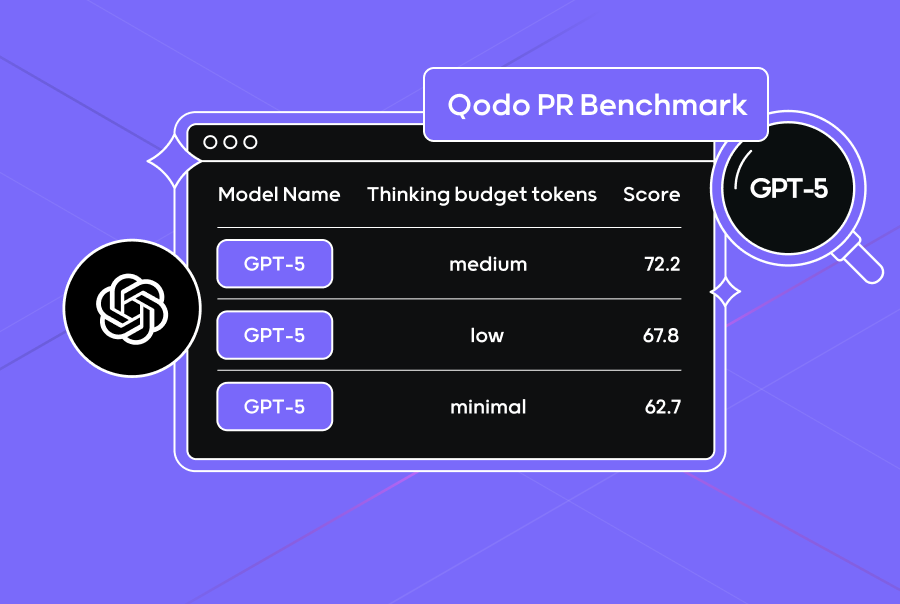

Benchmarking GPT-5 on Real-World Code Reviews with the PR Benchmark

Dedy Kredo, August 07, 2025

At Qodo, we believe benchmarks should reflect how developers actually work. That's why we built the PR Benchmark, which assesses how well language models handle tasks such as reviewing code, suggesting improvements, and understanding developer intent.

Unlike most widely used benchmarks, the PR Benchmark is private: its data has not been publicly released. This helps ensure that models haven't seen it during training, which makes the results fairer and more indicative of real-world generalization.

We recently evaluated a wide range of top-tier models, including variants of the newly released GPT-5, as well as Gemini 2.5, Claude Sonnet 4, Grok 4, and others. The results are promising across the board, and they offer a snapshot of how rapidly this space is evolving.

Qodo's PR Benchmark evaluates how well LLMs perform core pull request review tasks. It measures model performance on a dataset of 400 real-world PRs drawn from more than 100 public repositories, covering multiple languages, frameworks, and coding styles.