Understanding Google’s Quantum Error Correction Breakthrough

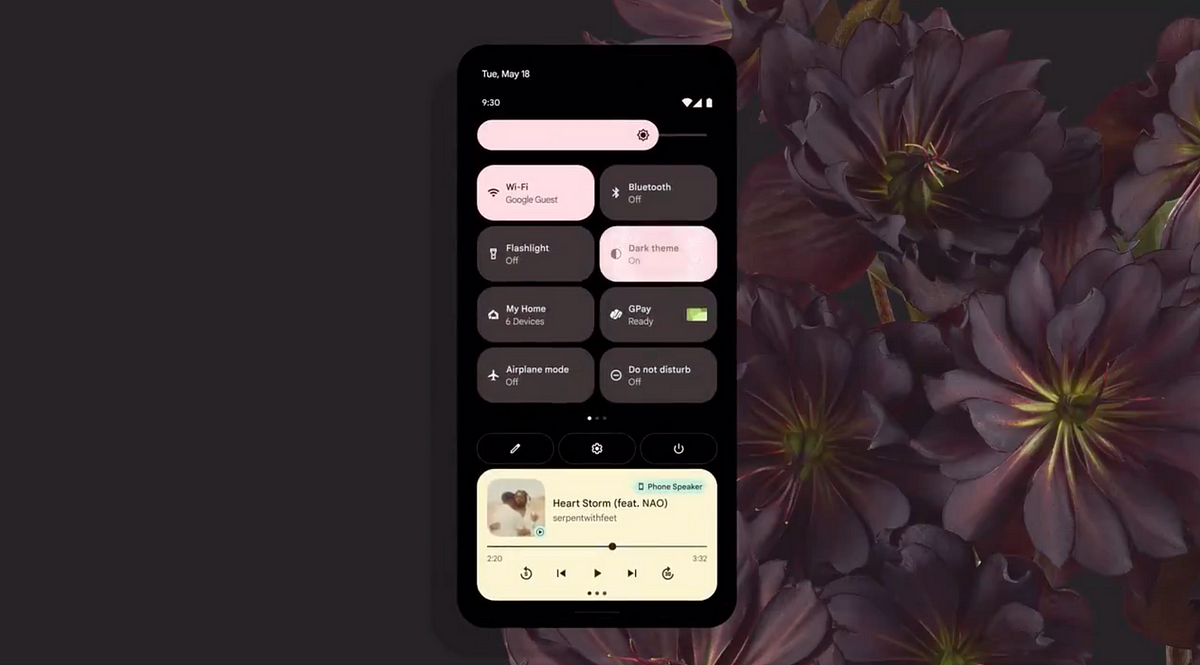

Imagine trying to balance thousands of spinning tops at the same time—each top representing a qubit, the fundamental building block of a quantum computer. Now imagine these tops are so sensitive that even a slight breeze, a tiny vibration, or a quick peek to see if they’re still spinning could make them wobble or fall. That’s the challenge of quantum computing: Qubits are incredibly fragile, and even the process of controlling or measuring them introduces errors.

This is where Quantum Error Correction (QEC) comes in. By combining multiple fragile physical qubits into a more robust logical qubit, QEC allows us to correct errors faster than they accumulate. The goal is to operate below a critical threshold: a physical error rate low enough that adding more qubits reduces, rather than increases, the error rate of the logical qubit. That’s precisely what Google Quantum AI has achieved with their recent breakthrough [1].
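To make the threshold idea concrete, here is a minimal sketch built on a classical stand-in, not on the code used in Google’s experiment. It stores one bit as n copies, lets each copy flip independently with probability p, and reads back whichever value appears most often. The function name `logical_error_rate` and the sample values of p are illustrative assumptions. For this toy model the threshold sits at p = 0.5, which is far more forgiving than the threshold of a real quantum code.

```python
from math import comb


def logical_error_rate(n: int, p: float) -> float:
    """Probability that a majority vote over n noisy copies returns the wrong bit.

    Assumes n is odd and that each copy flips independently with probability p.
    The vote fails when more than half of the copies have flipped.
    """
    if n % 2 == 0:
        raise ValueError("n must be odd so that the vote cannot tie")
    first_failing_count = n // 2 + 1
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(first_failing_count, n + 1)
    )


if __name__ == "__main__":
    # Compare a per-copy error rate below the toy threshold with one above it.
    for p in (0.1, 0.6):
        rates = [logical_error_rate(n, p) for n in (3, 5, 7)]
        print(f"p = {p}: " + ", ".join(f"{r:.4f}" for r in rates))
```

With p = 0.1 the printed values shrink as n grows, while with p = 0.6 they grow. That is the sense in which redundancy only helps once the underlying error rate is below the threshold.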

To grasp the significance of Google’s result, let’s first understand what success in error correction looks like. In classical computers, error-resistant memory is achieved by duplicating bits to detect and correct errors. A method called majority voting is often used, where multiple copies of a bit are compared, and the majority value is taken as the correct bit. In quantum systems, physical qubits are combined to create logical qubits, where errors are corrected by monitoring correlations among qubits instead of directly observing the qubits themselves. This involves redundancy, as majority voting does, but it relies on entanglement rather than on direct observation of the stored data. This indirect approach is crucial because directly measuring a qubit’s state would disrupt its quantum properties. Effective quantum error correction maintains the integrity of logical qubits, even when some physical qubits experience errors, making it essential for scalable quantum computing.
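The contrast between majority voting and "monitoring correlations" can be sketched with a three-bit repetition code simulated on ordinary bits. This is a classical analogy under simplifying assumptions: in real hardware the parity checks are measured through extra qubits, and quantum codes must also handle phase errors, none of which is modeled here. All function names are hypothetical and chosen for illustration.

```python
def encode(bit: int) -> list[int]:
    """Store one logical bit as three identical copies."""
    return [bit, bit, bit]


def majority_vote(bits: list[int]) -> int:
    """Classical decoding: read every copy and take the most common value."""
    return int(sum(bits) > len(bits) / 2)


def syndrome(bits: list[int]) -> tuple[int, int]:
    """Parity of neighbouring pairs: says whether copies agree, not what they are."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


# Which single copy to flip back for each possible syndrome.
SYNDROME_TO_FLIP = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(bits: list[int]) -> list[int]:
    """Repair a single flipped copy using only the parity information."""
    position = SYNDROME_TO_FLIP[syndrome(bits)]
    repaired = list(bits)
    if position is not None:
        repaired[position] ^= 1
    return repaired


if __name__ == "__main__":
    for logical in (0, 1):
        noisy = encode(logical)
        noisy[1] ^= 1  # flip the middle copy
        print(logical, noisy, syndrome(noisy), correct(noisy), majority_vote(noisy))
```

The same flipped copy produces the same syndrome whether the stored value is 0 or 1, so the parity checks locate the error without revealing the stored value. That property is the classical shadow of what lets quantum codes correct errors without directly measuring the data.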