Is AI hitting a wall? - by Rohit Krishnan

Of course, I can’t leave it at that. The question comes up because there have been a lot of statements that AI progress is stalling a bit. Even Ilya has said so.

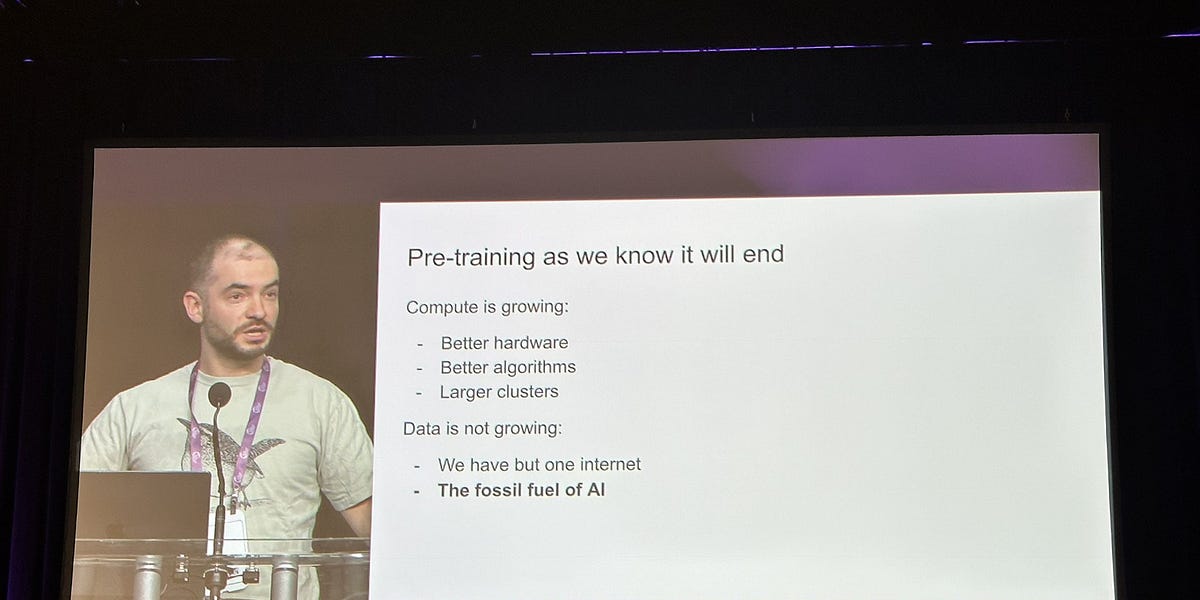

Ilya Sutskever, co-founder of AI labs Safe Superintelligence (SSI) and OpenAI, told Reuters recently that results from scaling up pre-training (the phase of training an AI model that uses a vast amount of unlabeled data to understand language patterns and structures) have plateaued.

Of course, he’s now a competitor to OpenAI, so maybe it makes sense for him to talk his book by downplaying compute as an overwhelming advantage. But still, the sentiment has been going around. Sundar Pichai thinks the low-hanging fruit is gone. There are whispers about why OpenAI’s Orion was delayed and why Claude 3.5 Opus is nowhere to be found.

Gary Marcus has claimed vindication. And even though he has claimed it before, a lot of folks are worried that this time he’s actually right.