Simon Willison’s Weblog

There’s a whole lot of buzz around the new Qwen2.5-Coder Series of open source (Apache 2.0 licensed) LLM releases from Alibaba’s Qwen research team. On first impression it looks like the buzz is well deserved.

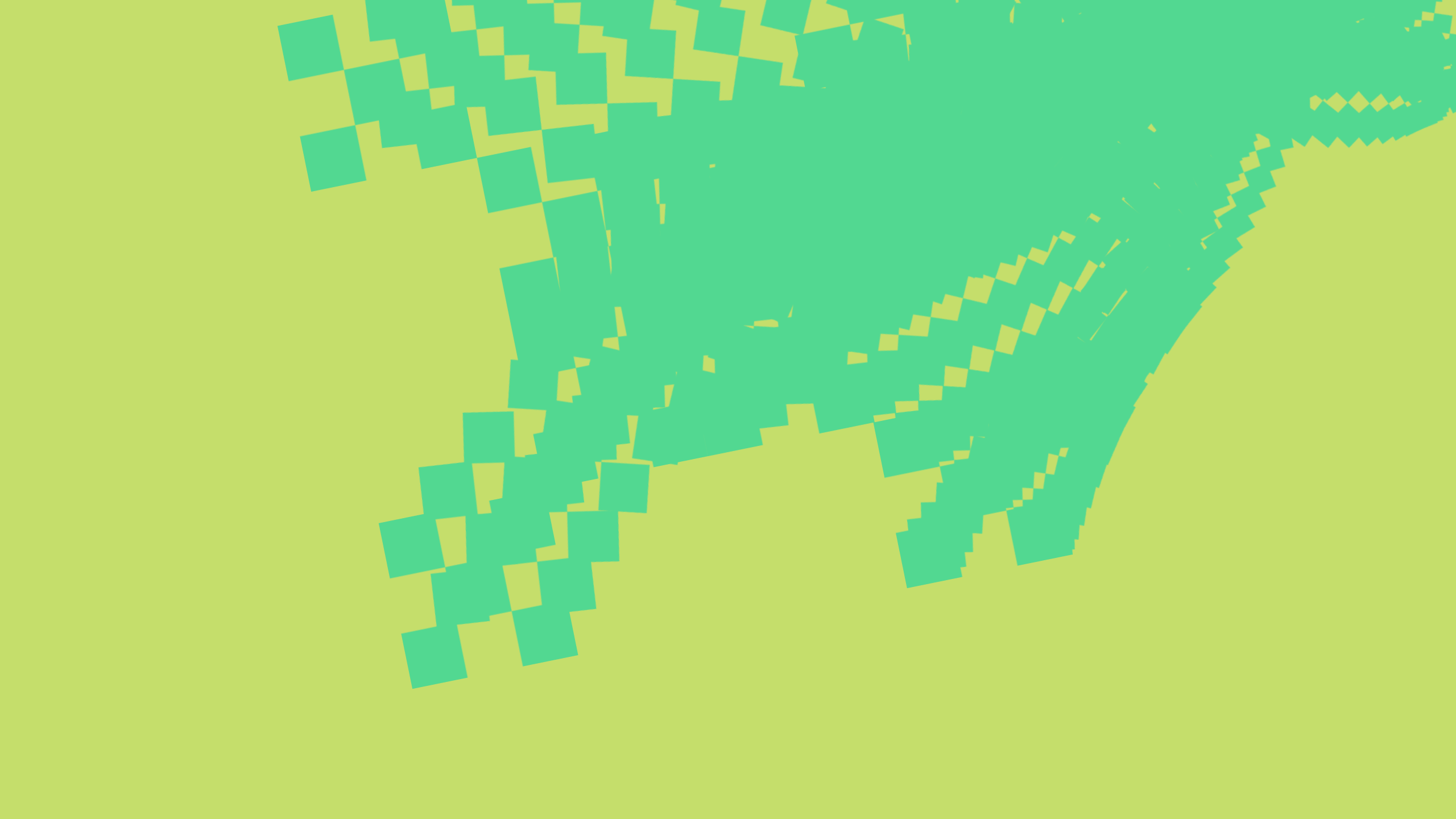

That’s a big claim for a 32B model that’s small enough to run on my 64GB MacBook Pro M2. The scores Qwen published look impressive, comparing favorably with GPT-4o and Claude 3.5 Sonnet (October 2024 edition) across various code-related benchmarks.

The new Qwen 2.5 Coder models did very well on aider’s code editing benchmark. The 32B Instruct model scored in between GPT-4o and 3.5 Haiku.

That was for the Aider “whole edit” benchmark. The “diff” benchmark scores well too, with Qwen2.5 Coder 32B tying with GPT-4o (but a little behind Claude 3.5 Haiku).

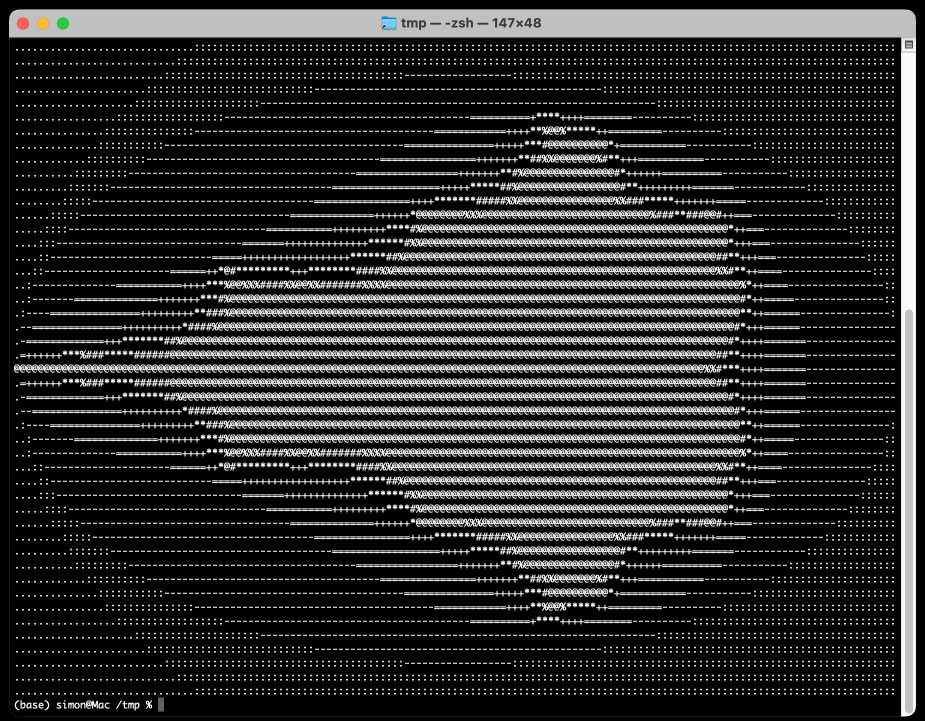

My attempts to run the Qwen/Qwen2.5-Coder-32B-Instruct-GGUF Q8 using llm-gguf were a bit too slow, because I don’t have that compiled to use my Mac’s GPU at the moment.
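For a rough sense of why a 32B model fits on a 64GB machine at all, here is a back-of-the-envelope sketch of the weight storage at a few precisions. It rests on several assumptions that are not from the original post: a nominal parameter count of exactly 32 billion, that "Q8" means the GGUF `Q8_0` block format (32 weights plus one 16-bit scale per block), and that only the weights count. The KV cache, activations and runtime overhead are ignored, so real memory use will be higher.

```python
# Rough weight-only memory estimate for a nominal 32B-parameter model.
# Ignores KV cache, activations and runtime overhead.

PARAMS = 32e9  # assumption: nominal "32B" parameter count

# Bits per weight. Q8_0 and Q4_0 pack 32 weights per block and add
# one 16-bit scale per block, which is where the extra 0.5 bit comes from.
BITS_PER_WEIGHT = {
    "fp16": 16.0,
    "q8_0": (32 * 8 + 16) / 32,  # 8.5 bits per weight
    "q4_0": (32 * 4 + 16) / 32,  # 4.5 bits per weight
}


def weight_gb(params: float, bits_per_weight: float) -> float:
    """Return approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


estimates = {name: weight_gb(PARAMS, bpw) for name, bpw in BITS_PER_WEIGHT.items()}

for name, gb in estimates.items():
    print(f"{name}: ~{gb:.0f} GB")
```

Under those assumptions the unquantized fp16 weights alone would need about 64 GB, while the Q8 weights come to roughly 34 GB and a 4-bit quantization to roughly 18 GB, which is why the quantized files are the practical option on a 64GB laptop.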