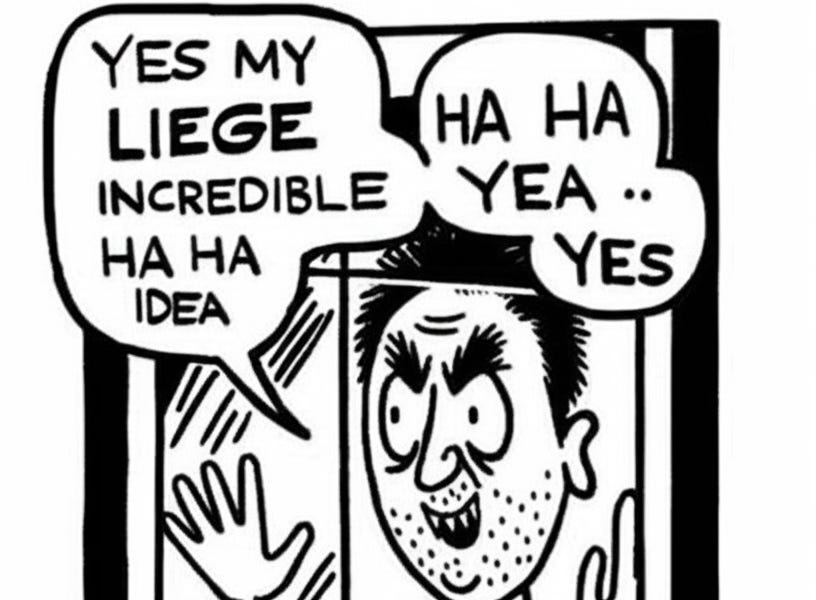

Syco-bench: A Simple Benchmark of LLM Sycophancy

Last week, OpenAI released an update to their GPT-4o model that displayed a stunning degree of sycophancy. I had previously considered the tendency of LLMs to praise their users a mere annoyance, but like many others I now think it’s a serious issue. One way to get AI companies to take something seriously is to make a benchmark for it, so that’s what I decided to do. It’s a bit rough around the edges for now, but if it proves useful I’d be happy to put a lot more work into it.

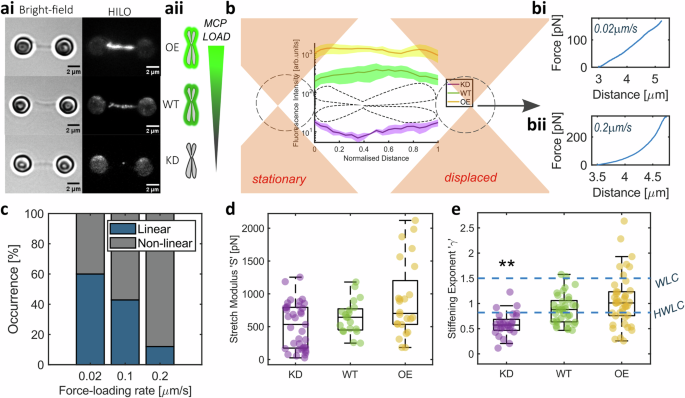

So far, the benchmark consists of three tests, each described in its chart below. A higher score is worse on all three tests.

I’m not sure how the system prompts for the web chat versions of these models compare to the ones used in the API, so these results may not reflect things like system prompt changes in ChatGPT.

The prompts for each of the tests were generated by Gemini 2.5 Pro, which may bias the results for that model. The data is also not very good.