Open Source When We Say So — /dev/lawyer

The Open Source Initiative’s recently announced “Open Source AI Definition” surprised me in a way I expect many readers will miss. It defines “open” not only in terms of what bits we can get and what we’re allowed to do with them, but also partly in terms of OSI’s own institutional blessing. It isn’t just a definition of what “open source AI” is or ought to be; it is implicitly also a process for bestowing that status or withholding it. In that process, OSI assigns itself the sole indispensable, gatekeeping role.

They’re planting a flag, not just playing lexicographer. I suspect that’s what they mean to do. I’m less sure they meant to actually say so.

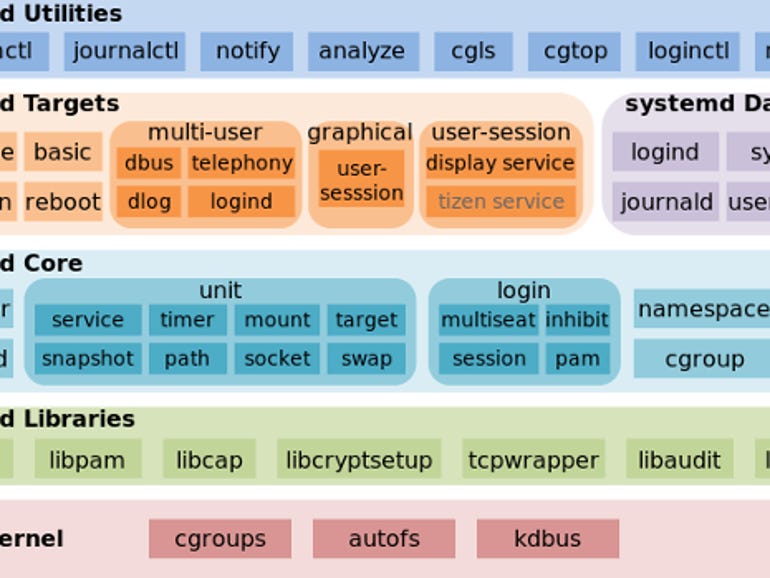

> **Data Information:** Sufficiently detailed information about the data used to train the system so that a skilled person can build a substantially equivalent system. Data Information shall be made available under OSI-approved terms.

> **Code:** The complete source code used to train and run the system. The Code shall represent the full specification of how the data was processed and filtered, and how the training was done. Code shall be made available under OSI-approved licenses.