Finally, a Replacement for BERT: Introducing ModernBERT

Benjamin Warner, Antoine Chaffin, Benjamin Clavié, Orion Weller, Oskar Hallström, Said Taghadouini, Alexis Gallagher, Raja Biswas, Faisal Ladhak, Tom Aarsen, Nathan Cooper, Griffin Adams, Johno Whitaker, Jeremy Howard, Iacopo Poli

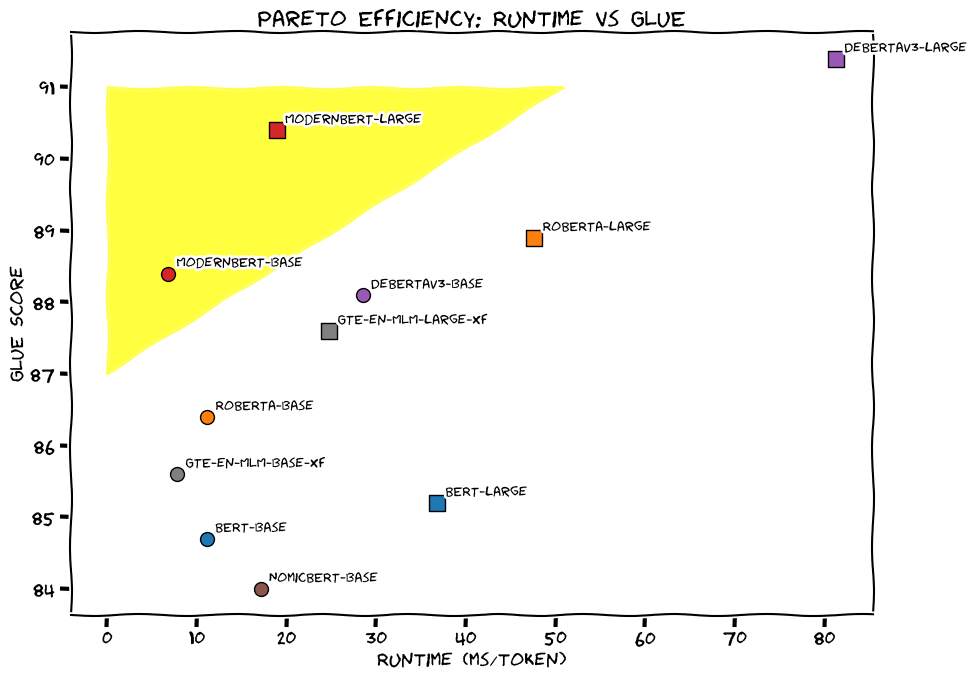

This blog post introduces ModernBERT, a family of state-of-the-art encoder-only models that improve on older-generation encoders across the board, with an 8192-token sequence length, better downstream performance, and much faster processing.

ModernBERT is available as a slot-in replacement for any BERT-like model, in both base (149M params) and large (395M params) sizes.

Since ModernBERT is a Masked Language Model (MLM), you can use the `fill-mask` pipeline or load it via `AutoModelForMaskedLM`. To use ModernBERT for downstream tasks like classification, retrieval, or QA, fine-tune it following standard BERT fine-tuning recipes.

⚠️ If your GPU supports it, we recommend using ModernBERT with Flash Attention 2 to reach the highest efficiency. To do so, install Flash Attention (the `flash-attn` package on PyPI), then use the model as normal.
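The fill-mask usage described above can be sketched roughly as follows. This is a minimal illustration, not the official snippet: the checkpoint id `answerdotai/ModernBERT-base` and the helper names `build_fill_mask_pipeline`, `best_fill`, and `demo` are assumptions introduced here, and it presumes a recent `transformers` release that includes ModernBERT support.

```python
"""Minimal fill-mask sketch for ModernBERT (assumptions noted inline)."""

# Assumption: public Hub id of the base checkpoint; the text above does not name it.
MODEL_ID = "answerdotai/ModernBERT-base"


def build_fill_mask_pipeline(model_id: str = MODEL_ID):
    """Load a fill-mask pipeline for the given checkpoint.

    transformers is imported lazily so that best_fill() below can be used
    (and tested) without the library or a network connection.
    """
    from transformers import pipeline

    return pipeline("fill-mask", model=model_id)


def best_fill(results: list[dict]) -> str:
    """Return the highest-scoring candidate token from fill-mask output.

    For a single-mask input, the pipeline returns a list of dicts that
    include "score" and "token_str" keys.
    """
    top = max(results, key=lambda r: r["score"])
    # Tokens may carry a leading space, so strip whitespace.
    return top["token_str"].strip()


def demo() -> None:
    """Hypothetical end-to-end run; downloads the checkpoint on first use."""
    pipe = build_fill_mask_pipeline()
    results = pipe("He walked to the [MASK].")
    print(best_fill(results))
```

Calling `demo()` runs the whole flow. For downstream tasks, the same checkpoint id would instead be passed to a task-specific class and fine-tuned, as with any BERT-like model.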

BERT was released in 2018 (millennia ago in AI-years!) and yet it’s still widely used today: in fact, it’s currently the second most downloaded model on the Hugging Face Hub, with more than 68 million monthly downloads, second only to another encoder model fine-tuned for retrieval. That’s because its encoder-only architecture makes it ideal for the kinds of real-world problems that come up every day, like retrieval (such as for RAG), classification (such as content moderation), and entity extraction (such as for privacy and regulatory compliance).