Creating 3D Line Drawings

This is an experiment examining how to create a 3D line drawing of a scene. In this post, I will describe how this can be done by augmenting the process of generating 3D Gaussian Splats (3D Gaussian Splatting for Real-Time Radiance Field Rendering, by Kerbl et al.) and by leveraging a process that transforms photographs into Informative Line Drawings (Learning to Generate Line Drawings that Convey Geometry and Semantics, by Chan, Isola & Durand).

Most of the example scenes were generated using a contour style. Each scene was trained for 21,000 iterations on an Nvidia RTX 4080S using MrNerf's gaussian-splatting-cuda with default settings. The examples use scenes from the Tanks & Temples benchmark. The scenes are interactive and are rendered with Mark Kellogg's web-based renderer.

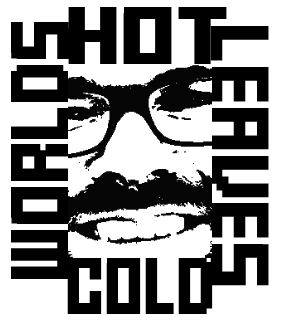

Images are transformed into line drawings using the approach introduced by Chan et al. in Learning to Generate Line Drawings that Convey Geometry and Semantics. They describe a process for transforming photographs into line drawings that preserve the semantics and geometry captured in the photograph while rendering the image in an artistic style. They do this by training a generative adversarial network (GAN) that minimizes three losses: a geometry loss, computed using monocular depth estimation; a semantics loss, computed using CLIP embeddings; and an appearance loss, based on a collection of unpaired style references. Their work is fantastic, and I recommend reading their paper if you're interested in the details. Figure 1 depicts the input photograph and the output line drawing in two styles, generated using Chan et al.'s code and model weights.
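To make the three-part objective more concrete, here is a minimal sketch of how a generator loss combining geometry, semantics, and appearance terms might be assembled. It is an illustration under stated assumptions, not Chan et al.'s implementation: the depth maps, CLIP embeddings, and discriminator probabilities are assumed to be precomputed arrays, the specific loss forms (mean squared error on depth, cosine distance on embeddings, a non-saturating GAN term) and the weights `w_geom`, `w_sem`, and `w_app` are hypothetical stand-ins, and the paper's exact formulation may differ or include additional terms.

```python
import numpy as np


def geometry_loss(depth_photo: np.ndarray, depth_drawing: np.ndarray) -> float:
    """Assumed form: mean squared error between the depth map estimated from
    the photo and the depth map estimated from the generated drawing."""
    return float(np.mean((depth_photo - depth_drawing) ** 2))


def semantic_loss(clip_photo: np.ndarray, clip_drawing: np.ndarray) -> float:
    """Assumed form: cosine distance (1 - cosine similarity) between CLIP embeddings."""
    cos = np.dot(clip_photo, clip_drawing) / (
        np.linalg.norm(clip_photo) * np.linalg.norm(clip_drawing)
    )
    return float(1.0 - cos)


def appearance_loss(disc_probs: np.ndarray, eps: float = 1e-8) -> float:
    """Assumed form: non-saturating generator loss, -log D(G(x)), where
    disc_probs are the discriminator's probabilities that each generated
    drawing matches the unpaired style references."""
    return float(-np.mean(np.log(disc_probs + eps)))


def generator_loss(depth_photo, depth_drawing, clip_photo, clip_drawing,
                   disc_probs, w_geom=1.0, w_sem=1.0, w_app=1.0) -> float:
    """Weighted sum of the three terms; the default weights are hypothetical."""
    return (w_geom * geometry_loss(depth_photo, depth_drawing)
            + w_sem * semantic_loss(clip_photo, clip_drawing)
            + w_app * appearance_loss(disc_probs))


# Toy usage: random stand-ins for depth maps, CLIP embeddings, and discriminator outputs.
rng = np.random.default_rng(0)
depth_photo = rng.random((64, 64))
depth_drawing = depth_photo + 0.01 * rng.standard_normal((64, 64))
clip_photo = rng.standard_normal(512)
clip_drawing = clip_photo + 0.1 * rng.standard_normal(512)
disc_probs = np.full(8, 0.5)
print(generator_loss(depth_photo, depth_drawing, clip_photo, clip_drawing, disc_probs))
```

In a real training loop these terms would be computed on tensors with automatic differentiation, and the depth and CLIP values would come from pretrained networks applied to both the photograph and the generated drawing; the NumPy version only shows how the pieces combine into one scalar objective.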
