How AI Understands Us: The Secret World of Embeddings

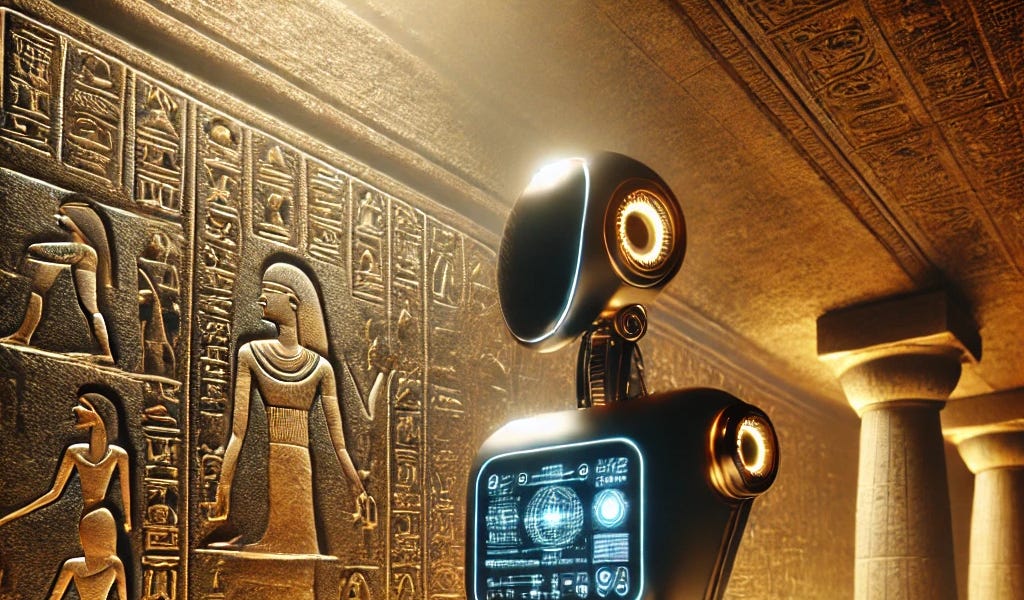

Picture an ancient manuscript full of strange symbols. You know they hold meaning, but without a key to decode them, their secrets remain hidden. For years, this was how computers "saw" human language—as strings of unrelated symbols, impossible to comprehend.

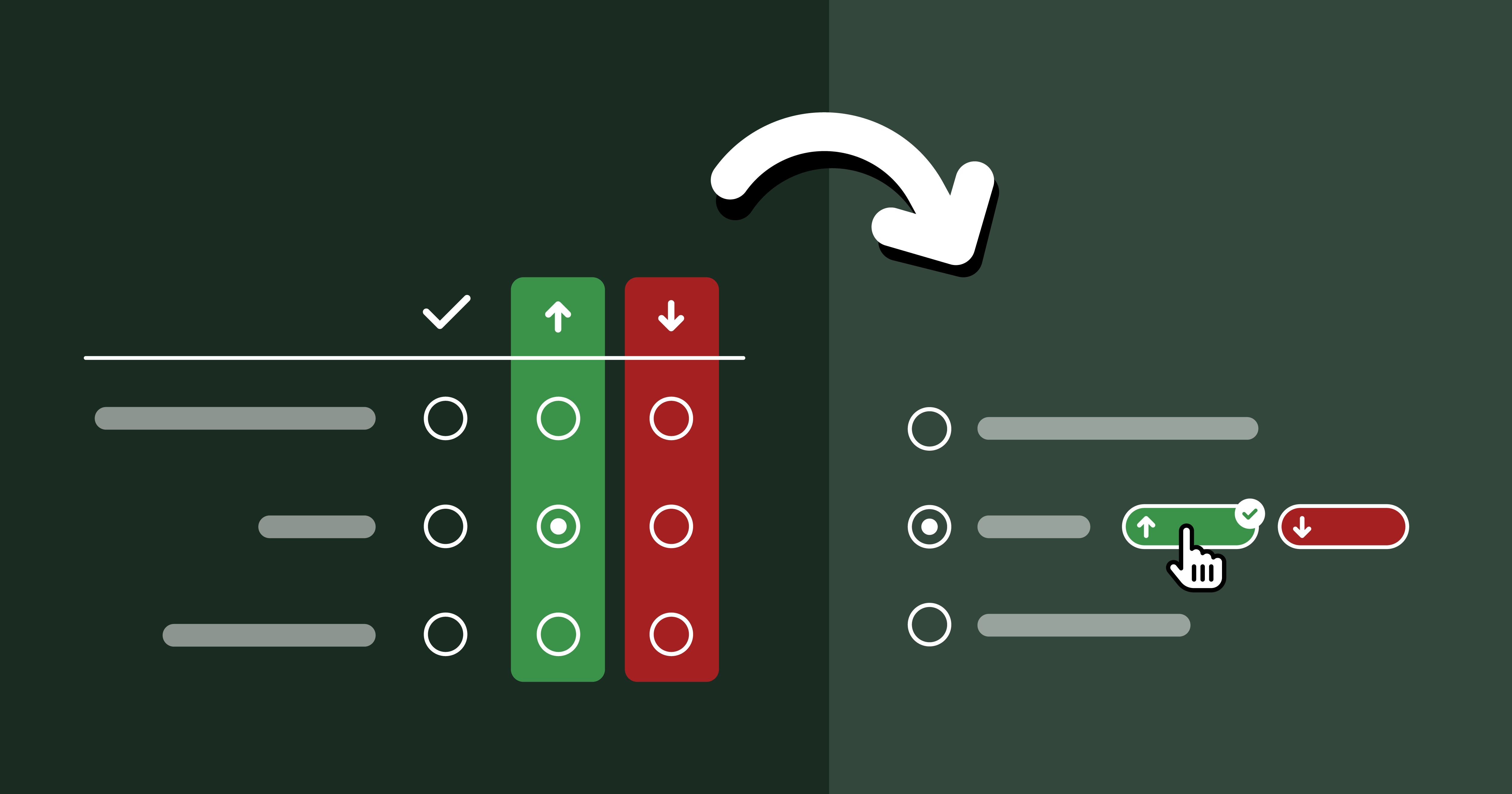

Now, imagine assigning coordinates to each of those symbols, grouping similar ones together to reveal connections. This is the magic of embeddings: a way for artificial intelligence to understand language through math. But before we unpack this fascinating concept, let's see why it became necessary.
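To make the idea of "coordinates" concrete, here is a toy sketch in Python. The three-number vectors are invented by hand purely for illustration (real models learn vectors with hundreds of dimensions from large amounts of text). Cosine similarity, used here to compare them, is one common way to measure how closely two vectors point in the same direction.

```python
import math

# Hypothetical, hand-picked 3-dimensional "embeddings" for illustration only.
# Real embedding models learn much longer vectors from data.
toy_embeddings = {
    "cat":    [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.75, 0.2],
    "car":    [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: values near 1.0 mean 'pointing the same way'."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

print(round(cosine_similarity(toy_embeddings["cat"], toy_embeddings["kitten"]), 3))
print(round(cosine_similarity(toy_embeddings["cat"], toy_embeddings["car"]), 3))
```

With these made-up numbers, "cat" and "kitten" score close to 1, while "cat" and "car" score far lower: words with similar meanings end up near each other in the space.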

Computers are good at working with numbers, but words pose a different challenge. When you read "cat," you might think of soft fur or a playful pet. For a computer, "cat" is just three letters: c, a, and t. It doesn’t understand how "cat" relates to "kitten" or "feline."
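A short Python sketch shows what a program actually has to work with. The helper names `char_codes` and `letter_overlap` are hypothetical, chosen for this illustration, and the overlap score is a deliberately naive spelling-only measure rather than anything a real language system relies on.

```python
def char_codes(word):
    """Return the Unicode code point of each character: all the raw string contains."""
    return [ord(ch) for ch in word]

def letter_overlap(a, b):
    """Jaccard overlap of the sets of letters in two words (a naive, spelling-only measure)."""
    sa, sb = set(a), set(b)
    return len(sa & sb) / len(sa | sb)

print(char_codes("cat"))  # [99, 97, 116]
for other in ["kitten", "feline", "car"]:
    print(other, round(letter_overlap("cat", other), 3))
```

By spelling alone, "car" looks like the closest match to "cat", and "feline" shares no letters with it at all. The raw characters carry no hint of meaning.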

This gap made processing language difficult. Early solutions relied on hand-built dictionaries that linked related words. That worked for simple cases, but it couldn’t capture language’s complexity: "bright" can describe a lamp or a clever student, and "cold" can describe the weather or a person’s manner. A fixed dictionary entry has no way of knowing which sense is meant.
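As a rough illustration of the dictionary approach, the sketch below hard-codes one entry per word. The `related_words` table and `lookup` function are hypothetical, not taken from any real system; the point is that a fixed entry gives the same answer no matter which sentence the word appears in.

```python
# Hypothetical hand-built table in the spirit of early rule-based systems:
# each word has exactly one fixed list of related words.
related_words = {
    "bright": ["shining", "luminous", "intelligent", "clever"],
    "cold": ["chilly", "freezing", "unfriendly", "distant"],
}

def lookup(word, sentence):
    """Return the fixed entry for word; the surrounding sentence is ignored entirely."""
    return related_words.get(word, [])

lamp = lookup("bright", "The lamp is bright.")
student = lookup("bright", "She is a bright student.")
print(lamp == student)  # True: the lookup cannot tell the two senses apart
```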