How We Generated Millions of Content Annotations

As the fields of machine learning (ML) and generative AI (GenAI) continue to evolve and expand rapidly, it has become increasingly important for innovators in these fields to anchor their model development on high-quality data.

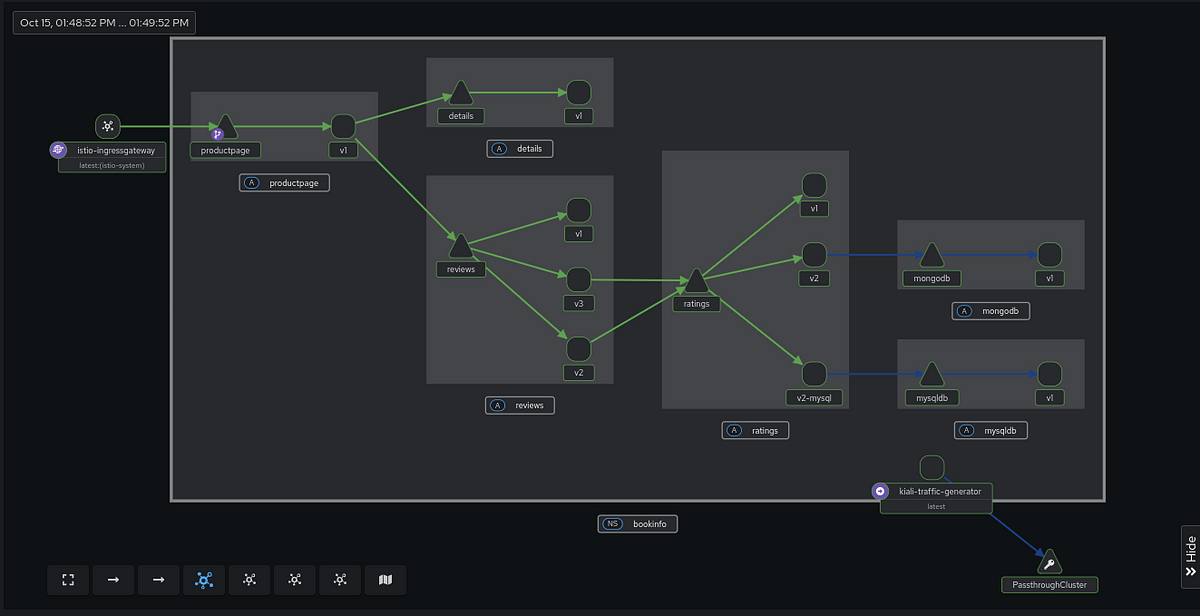

As one of the foundational teams at Spotify focused on understanding and enriching the core content in our catalogs, we leverage ML in many of our products. For example, we use ML to detect content relations so that a new track or album is automatically placed on the right Artist Page. We also use it to analyze podcast audio, video, and metadata to identify platform policy violations. Powering such experiences requires several ML models that cover entire content catalogs: hundreds of millions of tracks and podcast episodes. To implement ML at this scale, we needed a strategy for collecting high-quality annotations to train and evaluate our models. We wanted the data collection process to be more efficient and better connected, and to give engineers and domain experts the context they need to operate effectively.

To address this, we had to evaluate the end-to-end workflow. We took a straightforward ML classification project, identified the manual steps involved in generating annotations, and set out to automate them. We developed scripts to sample predictions, served the sampled data for operator review, and integrated the results with our model training and evaluation workflows. As a result, we increased the corpus of annotations tenfold and achieved a threefold improvement in annotator productivity.
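To make the sample-review-integrate loop concrete, here is a minimal Python sketch of what such scripts could look like. It is illustrative only and not our production code: the function names (`sample_for_review`, `merge_annotations`), the record fields (`id`, `score`), and the choice of confidence-bucketed sampling are all assumptions made for this example.

```python
import random
from collections import defaultdict


def sample_for_review(predictions, per_bucket=2, n_buckets=5, seed=0):
    """Pick up to `per_bucket` predictions from each confidence bucket.

    Assumes each prediction is a dict with a model `score` in [0, 1].
    Bucketing is one possible strategy; it spreads the review sample
    across low-, mid-, and high-confidence predictions.
    """
    rng = random.Random(seed)  # seeded so the sample is reproducible
    buckets = defaultdict(list)
    for item in predictions:
        # Map the score to a bucket index; clamp 1.0 into the last bucket.
        idx = min(int(item["score"] * n_buckets), n_buckets - 1)
        buckets[idx].append(item)

    sample = []
    for idx in sorted(buckets):
        items = buckets[idx]
        sample.extend(rng.sample(items, min(per_bucket, len(items))))
    return sample


def merge_annotations(sample, labels):
    """Attach operator labels (keyed by item id) to the sampled predictions.

    Items that have not been reviewed yet are skipped, so the output can
    feed straight into a training or evaluation set.
    """
    return [
        {**item, "label": labels[item["id"]]}
        for item in sample
        if item["id"] in labels
    ]


if __name__ == "__main__":
    # Hypothetical model output: 20 items with evenly spread scores.
    preds = [{"id": i, "score": i / 20} for i in range(20)]
    to_review = sample_for_review(preds)
    # Hypothetical operator decisions for the first three sampled items.
    reviewed = {item["id"]: "ok" for item in to_review[:3]}
    print(merge_annotations(to_review, reviewed))
```

Sampling across confidence buckets rather than uniformly is just one option; it tends to surface borderline predictions for operators while still checking the confident ones.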