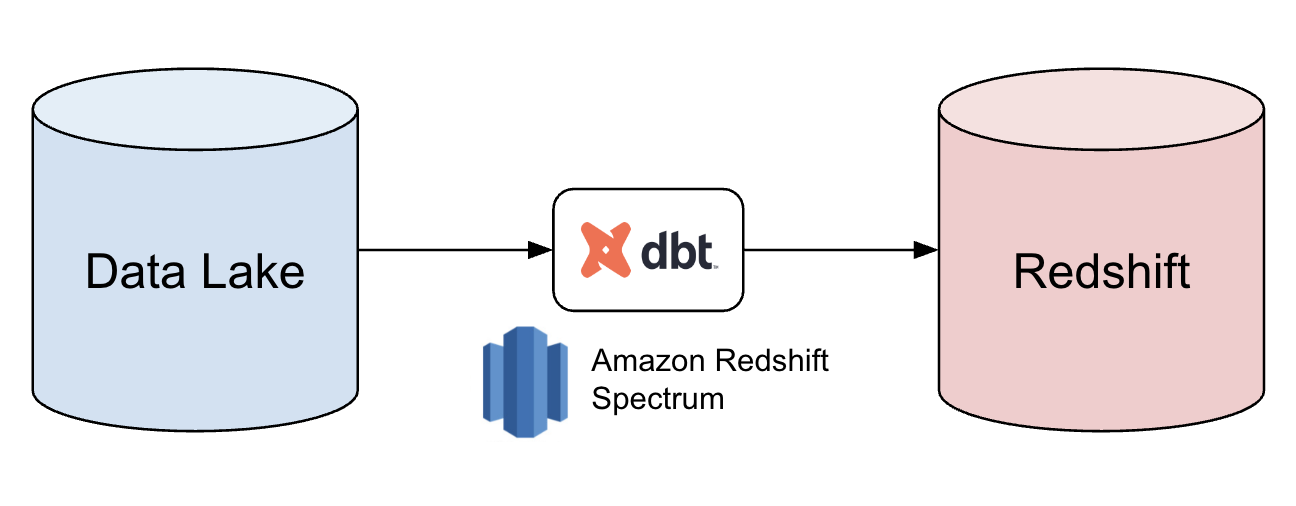

Loading data into Redshift with DBT

At Yelp, we embrace innovation and thrive on exploring new possibilities. With our consumers’ ever-growing appetite for data, we recently revisited how we could load data into Redshift more efficiently. In this blog post, we explore how DBT can be used with Redshift Spectrum to read data from the Data Lake into Redshift, significantly reducing runtime, resolving data quality issues, and improving developer productivity.

Our method of loading batch data into Redshift had been effective for years, but we continually sought improvements. We primarily used Spark jobs to read S3 data and publish it to our in-house Kafka-based Data Pipeline (which you can read more about here) to get data into both the Data Lake and Redshift. However, we began encountering a few pain points:

When considering how to move data around more efficiently, we chose to leverage AWS Redshift Spectrum, a tool built specifically to make it possible to query Data Lake data from Redshift. Since Data Lake tables usually had the most up-to-date schemas, we decided to use the Data Lake, rather than S3, as the data source for our Redshift batches. This not only helped reduce data divergence but also aligned with our best practice of treating the Data Lake as the single source of truth.
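
To make the idea concrete, here is a minimal sketch of the two SQL statements such a load typically boils down to: one that exposes a Data Lake database to Redshift as an external (Spectrum) schema, and one that copies rows from an external table into a regular Redshift table. It assumes the Data Lake tables are registered in an AWS Glue Data Catalog, which the post does not state. The schema, database, table, and column names and the IAM role ARN are hypothetical placeholders, and the helper functions are illustrative only, not part of DBT or any AWS library.

```python
from typing import Sequence


def external_schema_sql(schema: str, catalog_db: str, iam_role_arn: str) -> str:
    """Build DDL that maps a data catalog database to a Redshift external schema.

    Assumption: the Data Lake is registered in a Glue Data Catalog.
    """
    return (
        f"CREATE EXTERNAL SCHEMA IF NOT EXISTS {schema}\n"
        f"FROM DATA CATALOG\n"
        f"DATABASE '{catalog_db}'\n"
        f"IAM_ROLE '{iam_role_arn}';"
    )


def load_sql(
    target_table: str,
    external_schema: str,
    source_table: str,
    columns: Sequence[str],
) -> str:
    """Build an INSERT ... SELECT that reads from Spectrum into a local table."""
    col_list = ", ".join(columns)
    return (
        f"INSERT INTO {target_table} ({col_list})\n"
        f"SELECT {col_list}\n"
        f"FROM {external_schema}.{source_table};"
    )


if __name__ == "__main__":
    # All names below are hypothetical placeholders.
    print(external_schema_sql(
        schema="spectrum_lake",
        catalog_db="data_lake",
        iam_role_arn="arn:aws:iam::123456789012:role/example-spectrum-role",
    ))
    print(load_sql(
        target_table="analytics.business_events",
        external_schema="spectrum_lake",
        source_table="business_events",
        columns=["event_id", "business_id", "event_time"],
    ))
```

In a DBT project, the `SELECT` half of the second statement would usually live in a model file, with DBT generating the surrounding insert or table-creation logic from the model's materialization settings.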