A history of NVidia Stream Multiprocessor

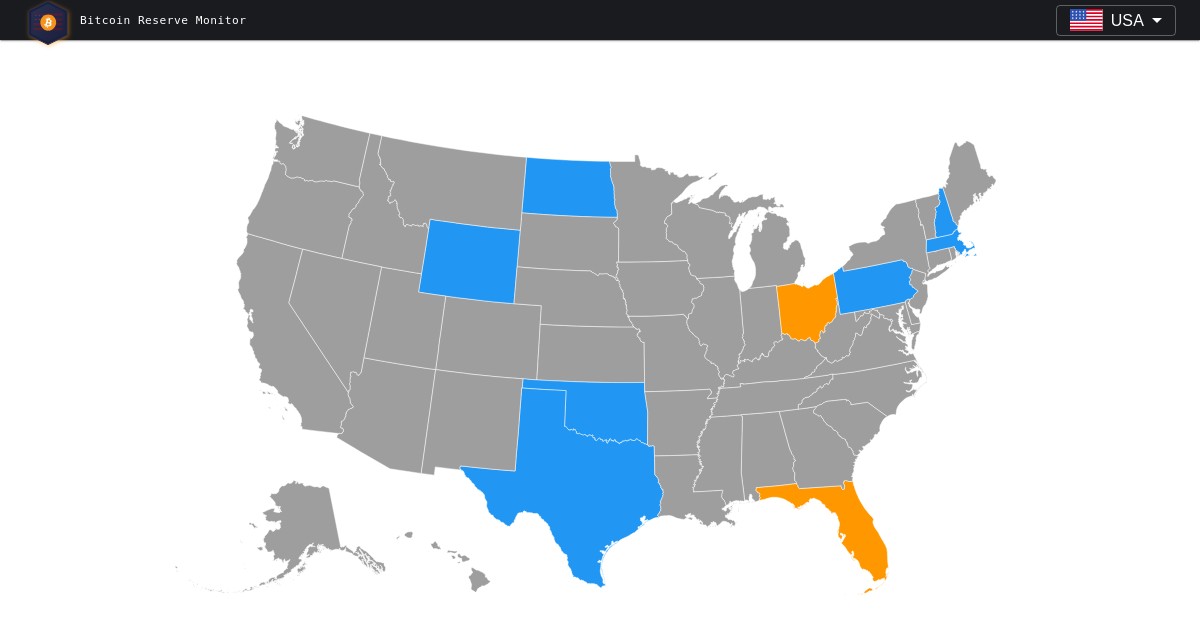

I spent last weekend getting accustomed to CUDA and SIMT programming. It was a productive time, ending with a Business Card Raytracer running close to 700x faster[1], from 101s down to 150ms. This pleasant experience was a good pretext to spend more time on the topic and learn about the evolution of Nvidia's architecture. Thanks to the abundant documentation published over the years by the green team, I was able to go back in time and fast-forward through the fascinating evolution of their stream multiprocessors.

Visited in this article:

| Year | Arch    | Series      | Die   | Process | Enthusiast Card |
|------|---------|-------------|-------|---------|-----------------|
| 2006 | Tesla   | GeForce 8   | G80   | 90 nm   | 8800 GTX        |
| 2010 | Fermi   | GeForce 400 | GF100 | 40 nm   | GTX 480         |
| 2012 | Kepler  | GeForce 600 | GK104 | 28 nm   | GTX 680         |
| 2014 | Maxwell | GeForce 900 | GM204 | 28 nm   | GTX 980 Ti      |
| 2016 | Pascal  | GeForce 10  | GP102 | 16 nm   | GTX 1080 Ti     |
| 2018 | Turing  | GeForce 20  | TU102 | 12 nm   | RTX 2080 Ti     |

Up to 2006, NVidia's GPU designs mirrored the logical stages of the rendering API[2]. The GeForce 7900 GTX, powered by a G71 die, was made of three sections dedicated to vertex processing (8 units), fragment generation (24 units), and fragment merging (16 units).

The G71. Notice the Z-Cull optimization discarding fragments that would fail the Z test.

This correlation forced designers to guess where bottlenecks would appear in order to properly balance each layer. With the emergence of yet another stage in DirectX 10, the geometry shader, Nvidia engineers found themselves faced with the difficult task of balancing a die without knowing how widely the new stage would be adopted. It was time for a change.
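To make the balancing problem concrete, here is a minimal sketch of an idealized throughput model. It assumes that each stage's work is measured in abstract "unit-cycles", splits perfectly across that stage's units, and that stages run concurrently, so a frame lasts as long as its slowest stage. Only the 8 vertex / 24 fragment unit counts come from the G71 description; the function names and workload numbers are hypothetical, and real hardware is far less tidy.

```python
from fractions import Fraction

# Idealized model (assumption): work divides perfectly among a stage's units,
# and pipelined stages overlap, so frame time = time of the slowest stage.
def split_design_time(work, units):
    """Frame time when each stage has its own dedicated pool of units."""
    return max(Fraction(work[stage], units[stage]) for stage in work)

# Idealized unified design (assumption): any unit can run any stage's work,
# so all work is spread over the whole pool.
def unified_design_time(work, total_units):
    """Frame time when every unit can run any stage's work."""
    return Fraction(sum(work.values()), total_units)

# G71-like split: 8 vertex units, 24 fragment units.
G71_UNITS = {"vertex": 8, "fragment": 24}
TOTAL = sum(G71_UNITS.values())  # 32 units in total

# Two hypothetical frames with the same total amount of work.
fragment_heavy = {"vertex": 80, "fragment": 240}  # matches the 1:3 unit ratio
vertex_heavy = {"vertex": 240, "fragment": 80}    # geometry-heavy frame

for name, work in [("fragment-heavy", fragment_heavy), ("vertex-heavy", vertex_heavy)]:
    print(name, split_design_time(work, G71_UNITS), unified_design_time(work, TOTAL))
```

When the workload matches the 1:3 ratio the designers guessed, both designs finish in 10 time units. Flip the ratio and the split design needs 30, with the fragment units idle most of the frame, while the unified pool still finishes in 10. Adding a geometry shader stage means sizing yet another fixed pool against an unknown workload.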