
This code is written for modern PyTorch (version 2.7 or newer) and uses DTensor-based parallelism: FSDP2 via `fully_shard` and tensor parallelism (TP) via `parallelize_module`. Support for other distributed training APIs is not guaranteed.

To enable more advanced distributed strategies such as Fully Sharded Data Parallel (FSDP) and Tensor Parallelism (TP), you can specify the configuration in the `dion_160m.yaml` file:

Alternatively, you can override these values directly from the command line. All three values must be given explicitly; set a size to 1 to disable that parallelism dimension.

Optimization algorithms are essential to training neural networks, converting gradients into model weight updates to minimize loss. For many years, the state-of-the-art method has been Adam/AdamW. However, recent work has shown that orthonormalized matrix updates can significantly accelerate model convergence. See Bernstein and Newhouse, 2025 for a theoretical justification.
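As a minimal illustration (not code from this repository), the orthonormalized update of a gradient matrix G with reduced SVD G = UΣVᵀ can be taken as its polar factor UVᵀ: the singular vectors are kept and every singular value is replaced by 1. The sketch uses NumPy rather than PyTorch so it runs anywhere; the `orthonormalize_svd` helper, the matrix shapes, and the learning rate are hypothetical.

```python
import numpy as np

def orthonormalize_svd(G: np.ndarray) -> np.ndarray:
    """Hypothetical helper: return U @ Vt, the orthonormalized version of G.

    Computes the reduced SVD G = U diag(S) Vt and discards the singular
    values S, so every singular value of the result equals 1.
    """
    U, _S, Vt = np.linalg.svd(G, full_matrices=False)
    return U @ Vt

rng = np.random.default_rng(0)
W = rng.standard_normal((6, 4))  # hypothetical 6x4 weight matrix
G = rng.standard_normal((6, 4))  # hypothetical gradient of the loss w.r.t. W
O = orthonormalize_svd(G)

lr = 0.02                        # hypothetical learning rate
W_new = W - lr * O               # step along the orthonormalized direction

# Every singular value of the update is 1, and (for a tall matrix)
# its columns are orthonormal: O^T O = I.
print(np.linalg.svd(O, compute_uv=False))
print(np.allclose(O.T @ O, np.eye(4)))
```

Because all singular values of `O` equal 1, the spectral norm of each step is exactly `lr`, no matter how unevenly the gradient's magnitude is spread across directions.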

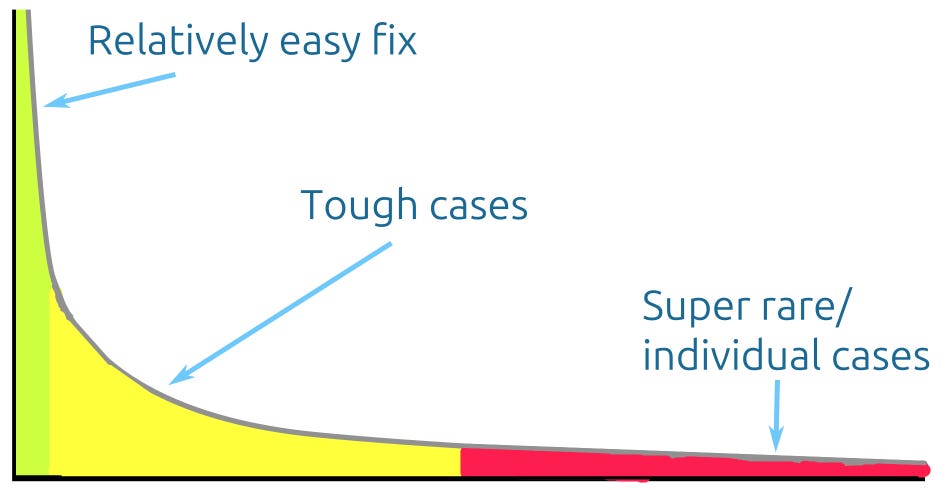

The practical effectiveness of orthonormal updates was first demonstrated by Muon in the NanoGPT speedrun, and has since been validated at scale by models such as Kimi K2 and GLM-4.5. Muon implements orthonormalization via Newton-Schulz iterations, which rely on repeated matrix-matrix multiplications. However, large-scale training relies on model sharding, where weight matrices and optimizer states are distributed across multiple processes. As discussed by Essential AI, orthonormalizing a sharded matrix with Newton-Schulz iterations requires the communication-intensive step of reconstructing the full matrix from its individual shards.
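To make the Newton-Schulz step and the sharding problem concrete, here is a small NumPy sketch (an illustration, not Muon's or Dion's implementation). It uses the textbook cubic iteration X ← 1.5X - 0.5XXᵀX, which needs only matrix multiplications and converges to UVᵀ once G is scaled so its singular values lie below √3; Muon itself uses a tuned quintic polynomial with only a few iterations. A two-way row split stands in for a sharded weight matrix; the `newton_schulz` helper, shapes, and step count are hypothetical.

```python
import numpy as np

def newton_schulz(G: np.ndarray, steps: int = 40) -> np.ndarray:
    """Hypothetical helper: approximate U @ Vt for G using matmuls only.

    Applies the cubic Newton-Schulz iteration X <- 1.5 X - 0.5 X X^T X.
    Dividing by the Frobenius norm (an upper bound on the spectral norm)
    puts all nonzero singular values in (0, 1], inside the convergence
    region (0, sqrt(3)).
    """
    X = G / np.linalg.norm(G)
    for _ in range(steps):
        X = 1.5 * X - 0.5 * X @ (X.T @ X)
    return X

rng = np.random.default_rng(0)
G = rng.standard_normal((8, 4))   # hypothetical full gradient matrix
shards = np.split(G, 2, axis=0)   # row shards held by 2 hypothetical ranks

# Correct: rebuild the full matrix first (an all-gather in a real run),
# then orthonormalize it.
full = newton_schulz(np.concatenate(shards, axis=0))

# Incorrect shortcut: orthonormalize each 4x4 shard locally and stack.
local = np.concatenate([newton_schulz(s) for s in shards], axis=0)

U, _, Vt = np.linalg.svd(G, full_matrices=False)
print(np.allclose(full, U @ Vt, atol=1e-6))   # full matrix matches U @ Vt
print(np.allclose(local, U @ Vt, atol=1e-3))  # per-shard result does not
```

Each shard's local result is orthonormal only with respect to that shard's rows, so stacking them does not reproduce the orthonormalized full matrix. Getting the correct answer this way means gathering every shard on every optimizer step, which is the communication cost described above.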