FastWan: Generating a 5-Second Video in 5 Seconds via Sparse Distillation

TL;DR: We introduce FastWan, a family of video generation models trained via a new recipe we call “sparse distillation”. Powered by FastVideo, FastWan2.1-1.3B generates a 5-second 480P video end-to-end in 5 seconds (1 second of denoising) on a single H200, and in 21 seconds (2.8 seconds of denoising) on a single RTX 4090. FastWan2.2-5B generates a 5-second 720P video in 16 seconds on a single H200. All resources, including model weights, training recipe, and dataset, are released under the Apache-2.0 license.

FastWan runs on a wide range of hardware, including NVIDIA H100, H200, and RTX 4090 GPUs and Apple Silicon. Check out FastVideo to get started.

FastWan2.2-TI2V-5B-FullAttn has a short sequence length (~20K tokens), so it does not benefit much from sparse attention; we train it only with DMD (Distribution Matching Distillation) and full attention. We are actively working on applying sparse distillation to the 14B models of both Wan2.1 and Wan2.2. Follow our progress on GitHub, Slack, and Discord!

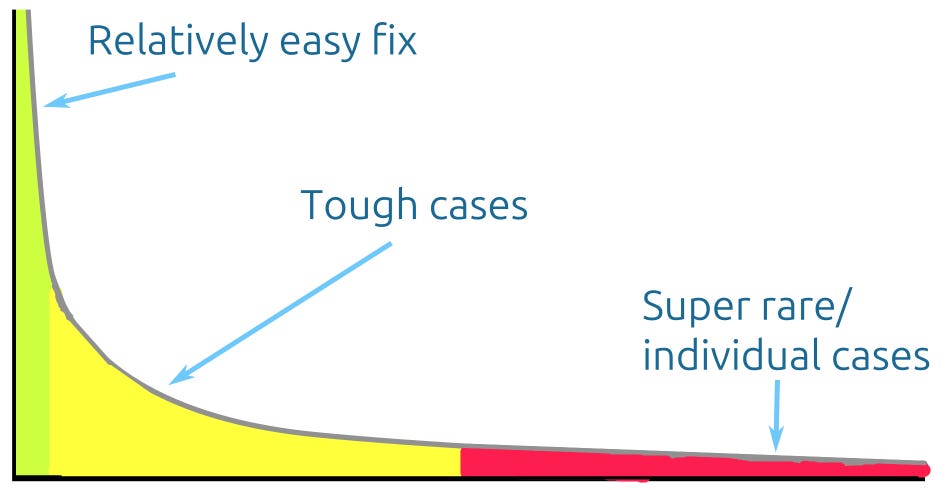

Sparse distillation is our core innovation in FastWan: the first method to jointly train sparse attention and denoising-step distillation in a unified framework. At its heart, sparse distillation answers a fundamental question: can we retain the speedups from sparse attention while applying extreme diffusion compression (e.g., 3 denoising steps instead of 50)? Prior work says no; in the following sections we show why Video Sparse Attention (VSA) changes that answer.
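To see why retaining sparse-attention speedups matters once the step count is distilled, consider a back-of-envelope, Amdahl-style cost model. This is a sketch under stated assumptions, not FastWan's own profiling: the attention share of per-step compute (0.8) and the fraction of attention blocks a sparse kernel still computes (0.2) are hypothetical illustration values, and the model ignores text encoding, VAE decoding, and kernel overheads.

```python
def relative_denoise_cost(steps: int, attn_share: float, attn_density: float) -> float:
    """Denoising cost measured in units of one dense-attention step.

    attn_share:   fraction of a dense step's compute spent in attention (hypothetical)
    attn_density: fraction of attention work a sparse kernel still performs
                  (1.0 means full attention; hypothetical)
    """
    # Non-attention work is unchanged; attention work scales with density.
    per_step = (1.0 - attn_share) + attn_share * attn_density
    return steps * per_step


ATTN_SHARE = 0.8      # hypothetical: attention dominates at long video sequence lengths
SPARSE_DENSITY = 0.2  # hypothetical: sparse attention computes 20% of the dense work

# 50-step dense baseline vs. 3-step distilled model, with and without sparse attention.
baseline = relative_denoise_cost(steps=50, attn_share=ATTN_SHARE, attn_density=1.0)
steps_only = relative_denoise_cost(steps=3, attn_share=ATTN_SHARE, attn_density=1.0)
steps_and_sparse = relative_denoise_cost(
    steps=3, attn_share=ATTN_SHARE, attn_density=SPARSE_DENSITY
)

print(f"step distillation alone:      {baseline / steps_only:.1f}x")
print(f"step distillation + sparsity: {baseline / steps_and_sparse:.1f}x")
```

With these hypothetical numbers, cutting 50 steps to 3 gives about 16.7x on its own, and keeping sparse attention compounds that to about 46.3x. Once the step count is small, what remains is the per-step cost, which this model assumes is mostly attention, so the two speedups multiply only if sparse attention survives distillation.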