Understanding Delta Lake's consistency model

A few days ago I released my analysis of Apache Hudi’s consistency model, with the help of a TLA+ specification. This post does the same for Delta Lake. As with the Hudi post, I will not comment on subjects such as performance, efficiency, or how batch and streaming use cases are supported. This post focuses solely on the consistency model, using a logical model of the core Delta Lake protocol.

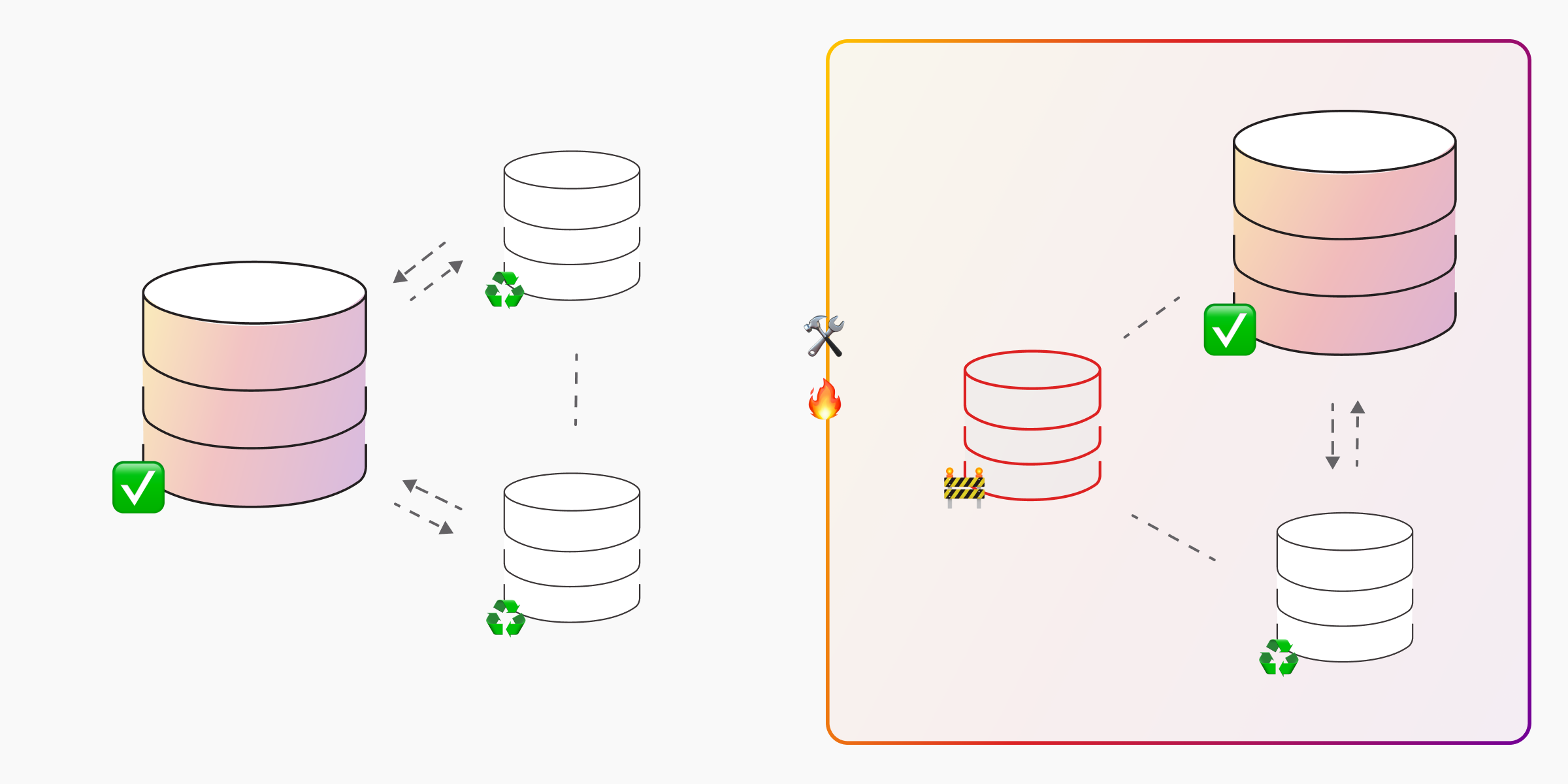

Delta Lake is one of the big three table formats that provide a table abstraction over object storage. Like Apache Hudi, it consists of a write-ahead log and a set of data files (usually Parquet). In Delta Lake, this log is called the delta log.
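On disk, the delta log lives in the table's `_delta_log` directory. Each log entry is a JSON file named after its zero-padded version number (for example `00000000000000000001.json`) and holds one action per line, including `add` and `remove` actions that reference data files by `path`. The sketch below parses a hypothetical, heavily abbreviated entry; real actions carry more fields, and `parse_entry` is an illustrative helper, not part of any Delta Lake library.

```python
import json

# Hypothetical, abbreviated log entry: one JSON action per line.
# Real entries carry more fields (size, modificationTime, dataChange, ...)
# and other action types (metaData, protocol, ...) that are omitted here.
entry_text = "\n".join([
    '{"commitInfo": {"operation": "WRITE"}}',
    '{"add": {"path": "part-00001.parquet"}}',
    '{"remove": {"path": "part-00000.parquet"}}',
])


def parse_entry(text: str) -> tuple[list[str], list[str]]:
    """Return (added paths, removed paths) found in one log entry."""
    added, removed = [], []
    for line in text.splitlines():
        if not line.strip():
            continue  # tolerate blank lines
        action = json.loads(line)
        if "add" in action:
            added.append(action["add"]["path"])
        elif "remove" in action:
            removed.append(action["remove"]["path"])
        # Any other action type (e.g. commitInfo) is ignored in this sketch.
    return added, removed


added, removed = parse_entry(entry_text)
print(added, removed)  # ['part-00001.parquet'] ['part-00000.parquet']
```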

The delta log is a write-ahead log containing metadata about the transactions that have occurred, including the data files added and removed in each transaction. Using this log, a reader or writer can build a snapshot of all the data files that make up the table at a given version. A data file that is not referenced from the delta log is unreadable. The basic idea behind Delta Lake's read and write operations is:

Writers write data files (usually Parquet) and commit them by writing a log entry to the delta log that lists the files they added and logically removed.
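The read and write paths described above can be captured in a toy, in-memory model. This is a sketch under simplifying assumptions, not Delta Lake's implementation: `DeltaLog`, `LogEntry`, `commit` and `snapshot` are hypothetical names, and the log is just a dict keyed by version. The commit step assumes that creating the log entry for a given version behaves like an atomic put-if-absent, so when two writers race for the same version only one succeeds. The snapshot step replays entries from version 0, dropping logically removed files and adding new ones.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class LogEntry:
    added: frozenset[str]
    removed: frozenset[str]


@dataclass
class DeltaLog:
    """Hypothetical in-memory delta log keyed by version number."""
    entries: dict[int, LogEntry] = field(default_factory=dict)

    def latest_version(self) -> int:
        return max(self.entries, default=-1)  # -1 means an empty log

    def commit(self, version: int, added: set[str], removed: set[str]) -> bool:
        # Assumption: writing an entry is an atomic put-if-absent, so only
        # the first writer to create a given version wins.
        if version in self.entries:
            return False
        if version != self.latest_version() + 1:
            raise ValueError("log versions must be contiguous")
        self.entries[version] = LogEntry(frozenset(added), frozenset(removed))
        return True

    def snapshot(self, version: int) -> set[str]:
        """Replay entries 0..version to get the table's data files."""
        files: set[str] = set()
        for v in range(version + 1):
            entry = self.entries[v]
            files -= entry.removed  # logically removed files drop out
            files |= entry.added    # newly committed files become visible
        return files


log = DeltaLog()
log.commit(0, {"a.parquet", "b.parquet"}, set())
log.commit(1, {"c.parquet"}, {"a.parquet"})
print(sorted(log.snapshot(0)))  # ['a.parquet', 'b.parquet']
print(sorted(log.snapshot(1)))  # ['b.parquet', 'c.parquet']
# A second writer that also read version 0 and tries to commit version 1 loses:
print(log.commit(1, {"d.parquet"}, set()))  # False
```

Because a snapshot is built by replaying the log rather than by listing files in storage, a data file that was written but never committed (like `d.parquet` above) never becomes visible to readers.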