Monosemanticity at Home: My Attempt at Replicating Anthropic's Interpretability Research from Scratch

Sometime near the end of last year, I came across a blog post by Scott Alexander giving an overview of Anthropic’s recent work on language model interpretability. The post is titled “God Help Us, Let's Try To Understand AI Monosemanticity”, which is highly provocative and slightly alarming, especially given the wild acceleration that AI capabilities research has experienced of late. Scott wants God’s help both because Anthropic’s research is dense and seemingly unapproachable at first glance, and because of the apparent dire need to understand what these models are actually doing. After reading through Scott’s post and Anthropic’s publication, however, I became less alarmed and more excited: the core of the research is actually pretty straightforward, but the findings are fascinating. If you’re not familiar with the research, I’d highly recommend reading Scott’s post, but here’s the gist:

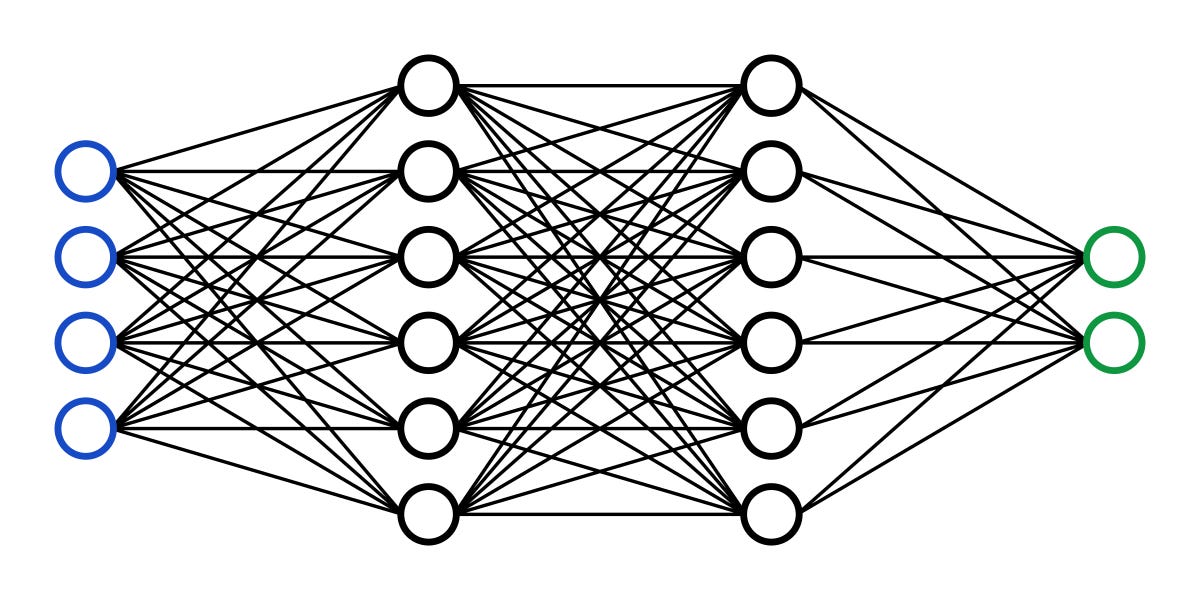

Large language models like ChatGPT (or Anthropic’s Claude, or Google’s Gemini) are extremely powerful and clearly employ some kind of complex, learned logic which we’d like to understand. One way to do this is to look at the atomic components of these models, called artificial neurons, and try to discern a function for each one. If we do this naively, however, we find that single neurons tend to correspond to multiple unrelated functions. For example, the authors find a single neuron which “responds to a mixture of academic citations, English dialogue, HTTP requests, and Korean text”. This is what they call “polysemanticity”, and it makes interpreting the model neuron by neuron almost impossible. The hypothesized cause is “superposition”: the model represents more concepts than it has neurons, so each concept is spread across many neurons and each neuron participates in many concepts. The researchers propose a technique using sparse dictionary learning which decomposes the neuron activations into “features”, linear combinations of neurons which are individually interpretable (that is, “monosemantic”) and thus allow a first look into how these models are working.
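To make the dictionary-learning idea a little more concrete, here is a minimal sketch of the forward pass and loss of a sparse autoencoder, one common way to do sparse dictionary learning on neuron activations. This is an illustration under assumptions, not Anthropic's code: the function names (`sae_forward`, `sae_loss`), the layer sizes, the `l1_coeff` value, and the random stand-in "activations" are all made up for the example, and no training loop is shown.

```python
import numpy as np

def sae_forward(x, W_enc, b_enc, W_dec, b_dec):
    """Encode neuron activations into (hopefully sparse) features, then reconstruct.

    x: (batch, n_neurons) activations recorded from some layer of a model.
    Returns features of shape (batch, n_features) and a reconstruction shaped like x.
    """
    # ReLU keeps feature activations non-negative; many come out exactly zero.
    features = np.maximum(0.0, (x - b_dec) @ W_enc + b_enc)
    # Each row of W_dec is one dictionary direction: a linear combination of neurons.
    recon = features @ W_dec + b_dec
    return features, recon

def sae_loss(x, features, recon, l1_coeff=1e-3):
    """Reconstruction error plus an L1 penalty that pushes features toward sparsity."""
    mse = np.mean(np.sum((x - recon) ** 2, axis=1))
    sparsity = np.mean(np.sum(np.abs(features), axis=1))
    return mse + l1_coeff * sparsity

# Hypothetical sizes: 8 neurons expanded into a dictionary of 32 features.
rng = np.random.default_rng(0)
n_neurons, n_features, batch = 8, 32, 4
x = rng.normal(size=(batch, n_neurons))  # random stand-in for real activations
W_enc = rng.normal(scale=0.1, size=(n_neurons, n_features))
b_enc = np.zeros(n_features)
W_dec = rng.normal(scale=0.1, size=(n_features, n_neurons))
b_dec = np.zeros(n_neurons)

features, recon = sae_forward(x, W_enc, b_enc, W_dec, b_dec)
loss = sae_loss(x, features, recon)
print(features.shape, recon.shape, float(loss))
```

The design choice worth noticing is that the dictionary has more features than there are neurons (32 versus 8 here). Together with the L1 penalty, that is what gives each learned feature room to specialize in a single concept instead of sharing a neuron with several others.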
