Data Pipeline Optimizations: Implementing a View Caching Layer in an ELT Platform

ActionIQ’s ELT pipelines run over 15,000 Spark jobs every day to load and prepare our customers’ data for querying. For our customers to have the most up-to-date data to power their customer experiences, it’s critical that our pipelines are reliable and always produce correct results. But materializing hundreds of terabytes of data every day is expensive: over 70% of our AWS EC2 compute bill for our Spark clusters comes from ingest pipeline usage. Since ActionIQ’s pricing is based on compute usage, neither we nor our customers benefit from inefficiencies in the pipelines.

In this post, we’ll explore a new view caching strategy we implemented to significantly reduce the compute usage of ActionIQ’s ELT pipelines.

ActionIQ’s customer data platform lets enterprise brands connect all their customer data, either by ingesting datasets into the platform or by querying their data warehouse directly, and gives marketers easy and secure ways to activate that data anywhere in the customer experience.

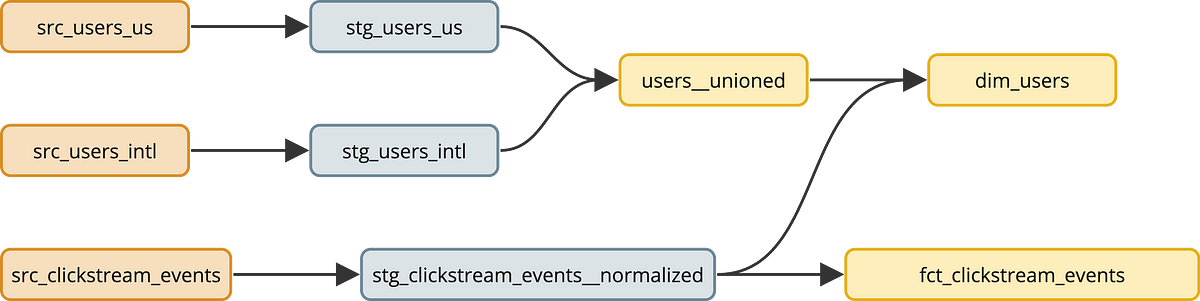

ActionIQ’s Pipelines product allows users to create scalable ELT pipelines that prepare raw customer data for marketing use cases. After the pipeline ingests raw customer data from source systems and normalizes it to our internal table format, the transformation step lets our customers define SQL-based views on top of their raw source data to create a cleansed and comprehensive 360-degree view of their customers. They can also create views based on other views to keep transformation logic modular and reusable. We materialize these views at ingest time to improve downstream performance when users run queries for insights and when we export data to downstream channels.
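
Because views can be built on other views, materializing them means walking a dependency graph: a view can only be computed once everything it reads from is ready. The sketch below illustrates that ordering with Python's standard `graphlib` module. The view and table names are hypothetical, and this is not ActionIQ's actual scheduler, just a minimal illustration of the idea.

```python
from graphlib import TopologicalSorter

# Hypothetical views: each maps to the set of tables or views it reads from.
# Names starting with "raw_" stand in for ingested source tables.
view_dependencies = {
    "clean_customers": {"raw_customers"},
    "clean_orders": {"raw_orders"},
    "customer_360": {"clean_customers", "clean_orders"},
}


def materialization_order(dependencies):
    """Return view names ordered so that every view follows its inputs."""
    sorter = TopologicalSorter(dependencies)
    # static_order() also yields the raw source tables, which are not views,
    # so keep only the names that are defined as views.
    return [name for name in sorter.static_order() if name in dependencies]


order = materialization_order(view_dependencies)
print(order)
```

In this example `customer_360` always comes after both `clean_customers` and `clean_orders`. A circular definition would make `TopologicalSorter` raise `graphlib.CycleError` instead of returning an order.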