Efficient finetuning of Llama 3 with FSDP QDoRA

This introductory note is from Answer.AI co-founder Jeremy Howard. The remainder of the article after this section is by Kerem Turgutlu and the Answer.AI team.

A few days ago, our expectations were realized when Meta announced their Llama 3 models. The largest will have over 400 billion parameters and, although its training isn’t finished, it is already matching the best LLMs from OpenAI and Anthropic. Meta also announced that they have applied continued pre-training to their models at far larger scales than we’ve seen before, using millions of carefully curated documents, and that this process greatly improved capability.

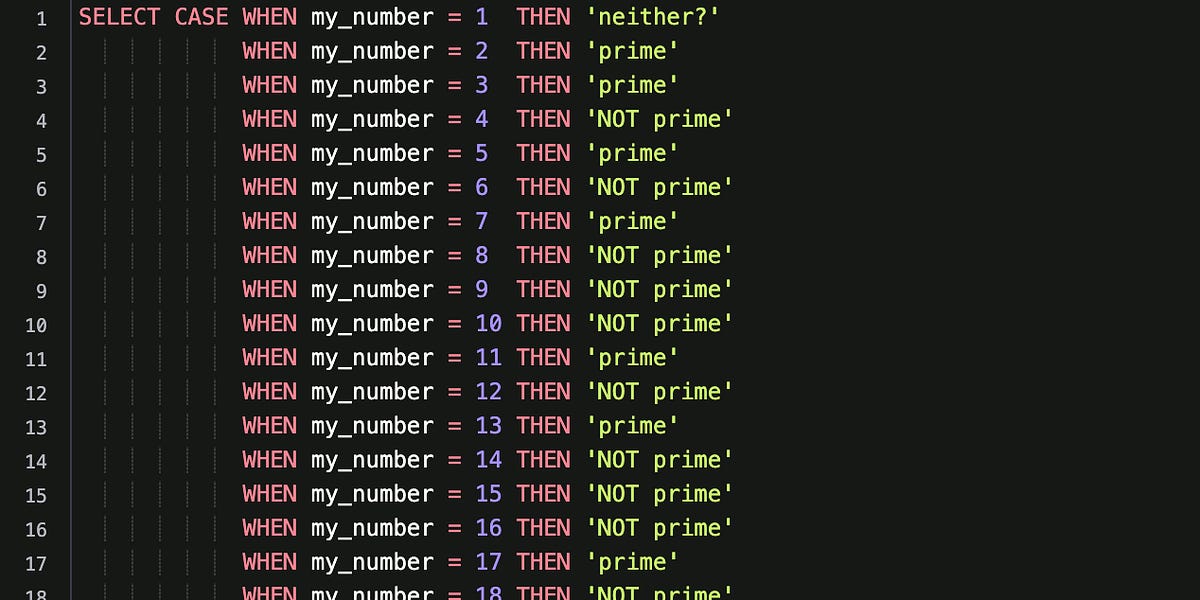

From the day we launched the company, we’ve been working on the technologies necessary to harness these two trends: larger models and continued pre-training. Last month, we completed the first step, releasing FSDP/QLoRA, which for the first time allowed large 70B-parameter models to be finetuned on gaming GPUs. That release addresses the first trend, making larger models practical to handle.

Today we’re releasing the next step: QDoRA. This is just as memory efficient and scalable as FSDP/QLoRA and, critically, is also as accurate for continued pre-training as full weight training. We think that this is likely to be the best way for most people to train language models. We’ve run preliminary experiments on Llama 2 and completed some initial ones on Llama 3. The results are extremely promising.