The Stanford AI Lab Blog

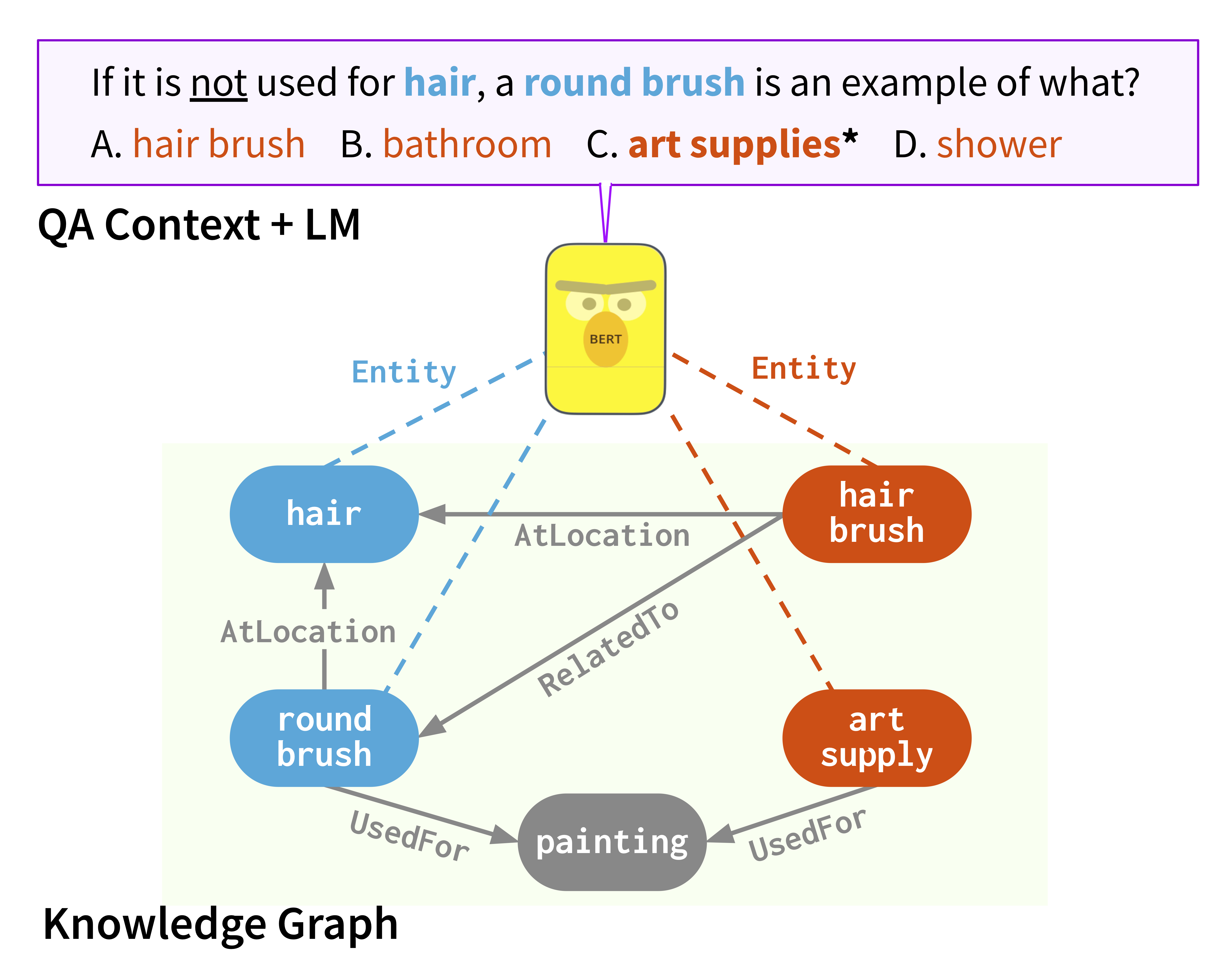

From search engines to personal assistants, we use question-answering systems every day. When we ask a question (“Where was the painter of the Mona Lisa born?”), the system needs to gather background knowledge (“The Mona Lisa was painted by Leonardo da Vinci”, “Leonardo da Vinci was born in Italy”) and reason over it to produce the answer (“Italy”).

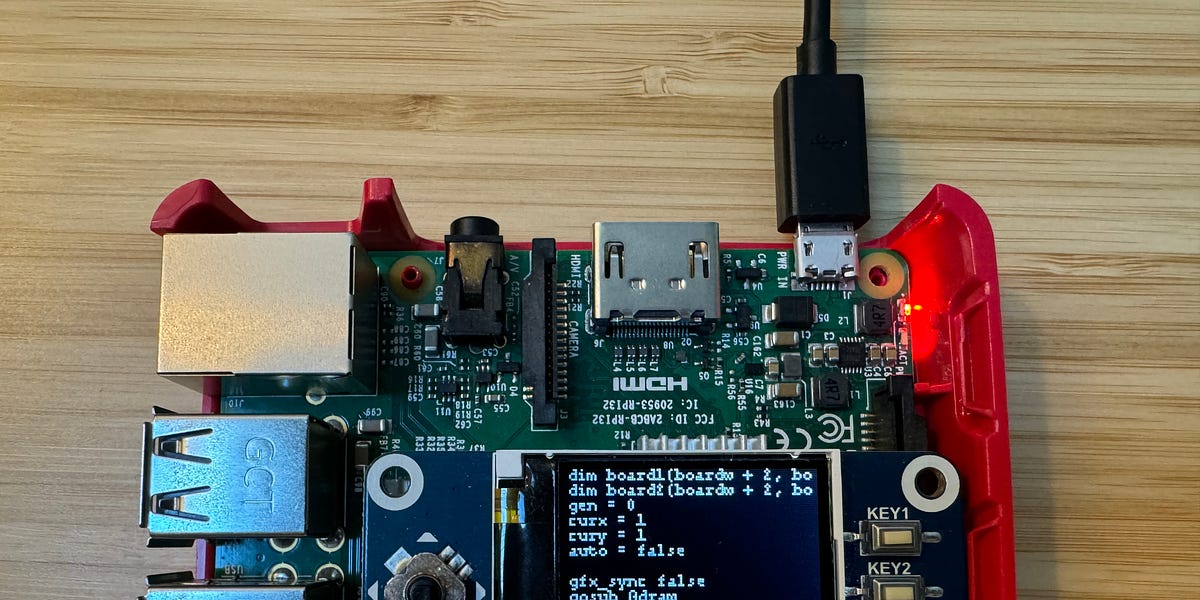

**Knowledge sources.** In recent AI research, such background knowledge is commonly available in the form of knowledge graphs (KGs) and language models (LMs) pre-trained on a large set of documents. In KGs, entities are represented as nodes and relations between them as edges, e.g. [Leonardo da Vinci — born in — Italy]. Examples of KGs include Freebase (general-purpose facts) [1], ConceptNet (commonsense) [2], and UMLS (biomedical facts) [3]. Examples of pre-trained LMs include BERT (trained on Wikipedia articles and 10,000 books) [4], RoBERTa (extending BERT) [5], BioBERT (trained on biomedical publications) [6], and GPT-3 (the largest public LM to date) [7].

The two knowledge sources have complementary strengths. LMs can be pre-trained on any unstructured text and thus have broad coverage of knowledge. KGs, on the other hand, are more structured and support logical reasoning by providing explicit paths between entities. KGs also include knowledge that is rarely stated in text: for instance, people seldom write down obvious facts like “people breathe” or compositional sentences like “The birthplace of the painter of the Mona Lisa is Italy”.
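To make the idea of "paths between entities" concrete, here is a minimal sketch of the Mona Lisa question answered over a toy KG. The triple list, the relation names, and the helpers `build_index` and `follow_path` are illustrative assumptions, not part of any real KG library or of the systems cited above.

```python
from collections import defaultdict

# Toy knowledge graph stored as (head, relation, tail) triples.
# These two facts mirror the running example; a real KG holds millions.
TRIPLES = [
    ("Mona Lisa", "painted by", "Leonardo da Vinci"),
    ("Leonardo da Vinci", "born in", "Italy"),
]


def build_index(triples):
    """Map each (head, relation) pair to the list of tails it points to."""
    index = defaultdict(list)
    for head, relation, tail in triples:
        index[(head, relation)].append(tail)
    return index


def follow_path(index, start, relations):
    """Follow a sequence of relations from `start`, one hop per relation.

    Returns the set of entities reached after the last hop
    (empty if any hop has no matching edge).
    """
    frontier = {start}
    for relation in relations:
        frontier = {
            tail
            for entity in frontier
            for tail in index.get((entity, relation), [])
        }
    return frontier


index = build_index(TRIPLES)
# "Where was the painter of the Mona Lisa born?" as a two-hop path.
answer = follow_path(index, "Mona Lisa", ["painted by", "born in"])
print(answer)
```

The answer is never stored as a single fact here. It comes from chaining two edges, which is the kind of compositional knowledge that is rarely written out in text. The sketch also assumes the question has already been mapped to a relation path, which in practice is a hard part of the problem.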