Out with Golden Datasets: Here's Why Random Sampling is Better

Crafting high-quality prompts and evaluating them has become a pressing issue for AI teams. A well-designed prompt can elicit highly relevant, coherent, and useful responses, while a suboptimal one can lead to irrelevant, incoherent, or even harmful outputs.

To create high-performing prompts, teams need both high-quality input variables and clearly defined tasks. However, since LLMs are inherently unpredictable, quantifying the impact of even small prompt changes is extremely difficult.

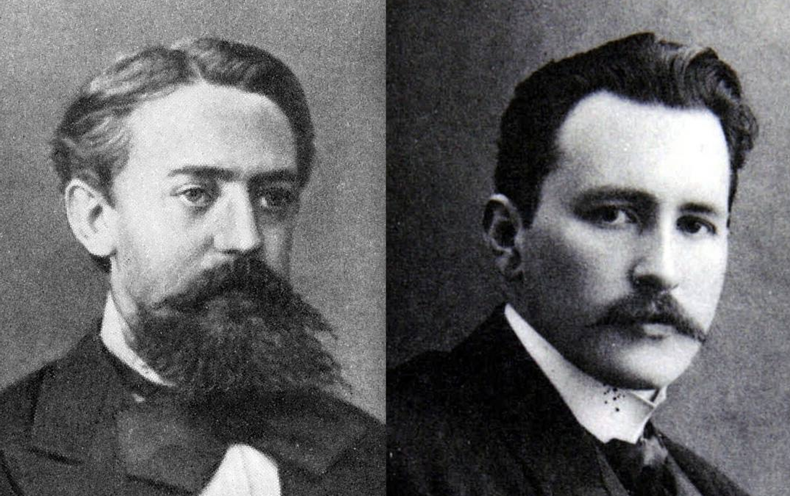

In a recent QA Wolf webinar, Nishant Shukla , the senior director of AI at QA Wolf, and Justin Torre , the CEO and co-founder of Helicone, shared their insights on how they tackled the challenge of effective prompt evaluation.

To address this challenge, teams have traditionally turned to the use of Golden Datasets. Golden Datasets are widely used because they are great for benchmarking and evaluating problems that are well-defined and easily reproducible.

Golden Datasets are meticulously cleaned, labeled, and verified. Teams use Golden Datasets to make sure their applications perform optimally under controlled conditions.

Leave a Comment

Related Posts