Offline llama3 says it sends corrections back to Meta's server

TIL that I'm no better than all the old people on Facebook who believe what the scammers tell them. Let that be a lesson to you: don't use an LLM for fact-checking.

Just a couple of days ago, Meta released version 3 of their large language model, Llama, and made it open source. It's really good, and so much faster than the previous version. With the help of Ollama, you can install llama3 quickly and conveniently on your own computer. That means you're running your queries offline, with no need to send all your data to the cloud, where they will do god knows what with it.
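To make "running locally" a bit more concrete, here is a minimal sketch of how a query to llama3 through Ollama can look from Python. It assumes Ollama is running with its default settings, which expose an HTTP API on `localhost:11434`, and that the `llama3` model has already been pulled. The helper names (`build_request`, `is_local`, `ask`) are my own, made up for illustration, and are not part of Ollama.

```python
import json
import urllib.request
from urllib.parse import urlparse

# Assumption: Ollama's default local endpoint; adjust if you changed host or port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3"):
    """Build (but do not send) a POST request for Ollama's generate endpoint."""
    payload = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
    )


def is_local(url):
    """Return True if the URL points at this machine (loopback host names only)."""
    return urlparse(url).hostname in {"localhost", "127.0.0.1", "::1"}


def ask(prompt, model="llama3"):
    """Send the prompt to the local Ollama server and return the model's answer."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

With Ollama running, `ask("Why is the sky blue?")` should return the model's answer as a string. The only address this script itself talks to is the one in `OLLAMA_URL`, which `is_local` confirms is a loopback address. That says nothing about what the Ollama process does on its own, so treat it as a sketch of the client side only.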

I started a conversation titled "What do you personally use AI for?" on Lemmy, and someone said that they would use AI if it wasn't connected to the Internet. I suggested they check out llama 3 because it runs locally. They asked me whether it really doesn't connect to any server while you're online. I said that was correct, which I had merely assumed. But oh boy, as the saying goes: if you ASSUME, you make an ASS out of U and ME.

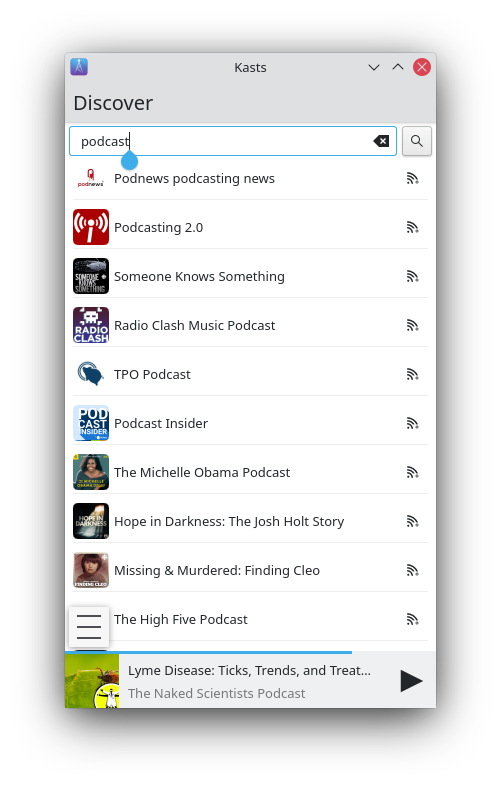

I got curious and asked the llama3 model itself about it, and sure enough, it said that even though it does not send our conversation to Meta, it does take my feedback (if I correct it in some way) and send it to Meta's servers over the Internet.
