OpenCoder: A True Open-Source Approach to Code LLMs

In simple terms, a code LLM is a Large Language Model trained specifically to understand and generate programming code. Such models assist with code-related tasks such as writing, completing, or explaining code snippets.

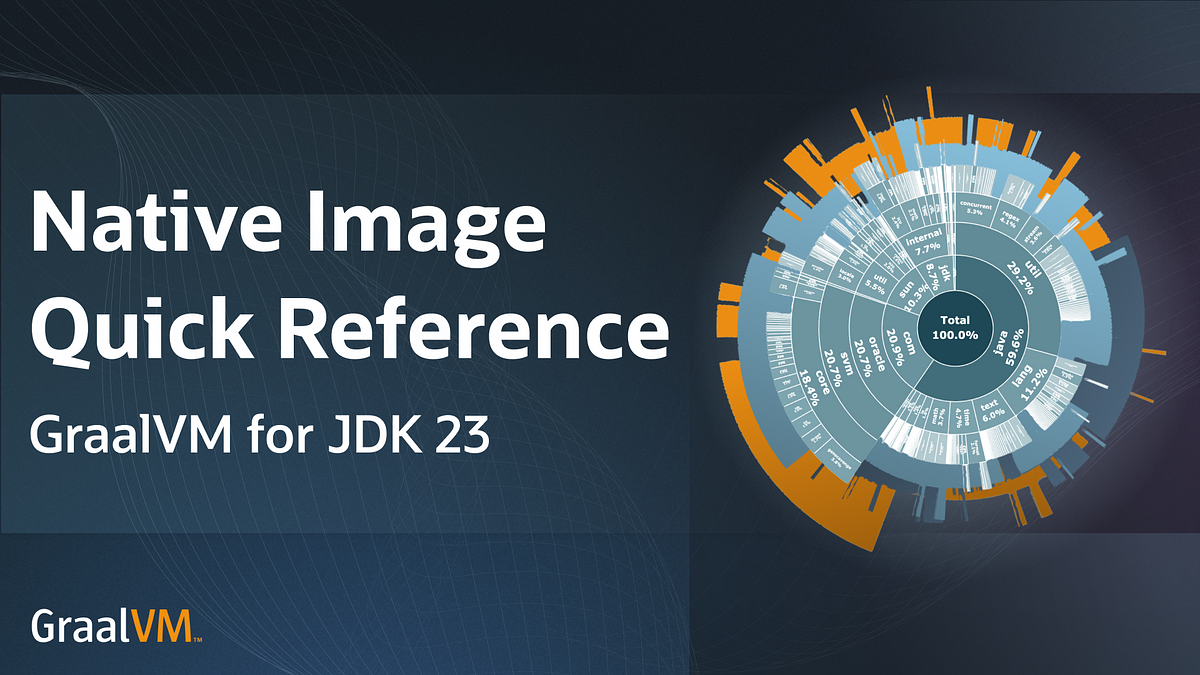

OpenCoder aims to match the performance of top-tier proprietary and open-source models while staying fully open. Other open code LLMs include Qwen from Alibaba and Code Llama from Meta.

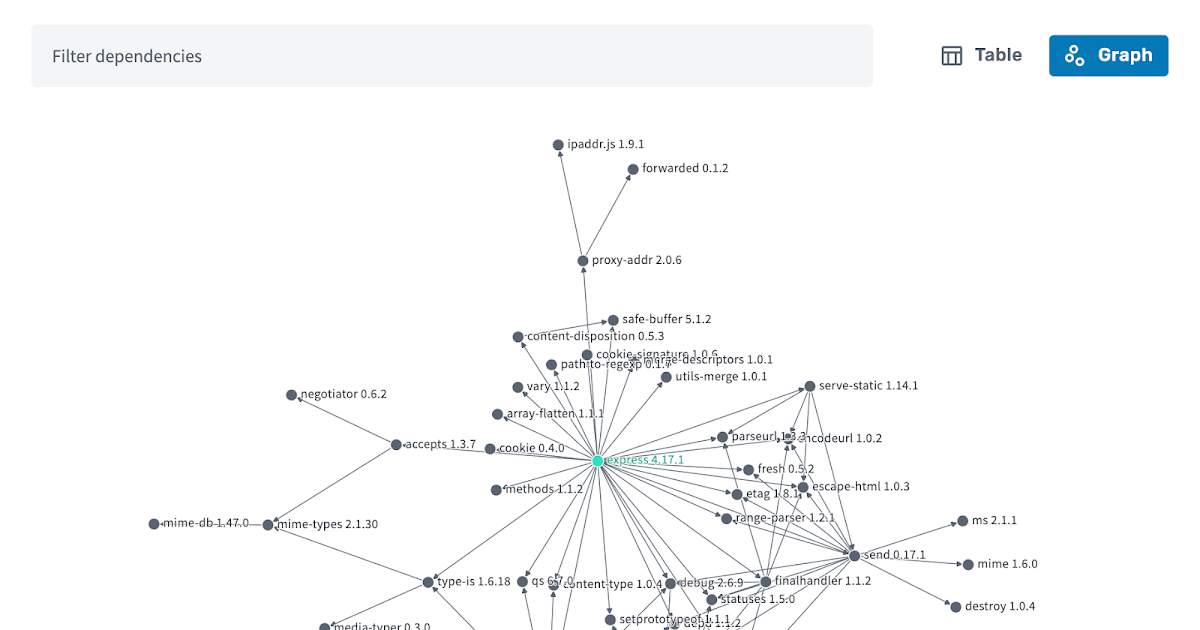

OpenCoder not only gives access to its final models and tools but also shares its full training process, including the data-processing steps and training protocols.

Trained on 2.5 trillion tokens (90% raw code and 10% code-related web data), OpenCoder matches the performance of proprietary models without locking its methods or results behind closed doors.
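
To make the data mix concrete, here is a small back-of-the-envelope sketch of the token counts implied by the 90/10 split. The function and variable names are hypothetical, and the figures are approximations derived only from the percentages quoted above, not exact counts published by the OpenCoder team.

```python
# Rough token split implied by the stated 90% / 10% mix.
# All names here are illustrative; only the totals come from the article.
TOTAL_TOKENS = 2_500_000_000_000  # 2.5 trillion tokens


def split_tokens(total, raw_code_pct=90):
    """Return (raw_code_tokens, web_data_tokens) for a given percentage mix."""
    raw_code = total * raw_code_pct // 100  # integer math avoids float rounding
    web_data = total - raw_code             # the remainder is code-related web data
    return raw_code, web_data


raw_code, web_data = split_tokens(TOTAL_TOKENS)
print(f"raw code: {raw_code / 1e12:.2f}T tokens")
print(f"code-related web data: {web_data / 1e12:.2f}T tokens")
```

Under these assumptions, roughly 2.25 trillion tokens are raw code and about 0.25 trillion are code-related web data.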
